Introduction to AI in Product Catalog Management

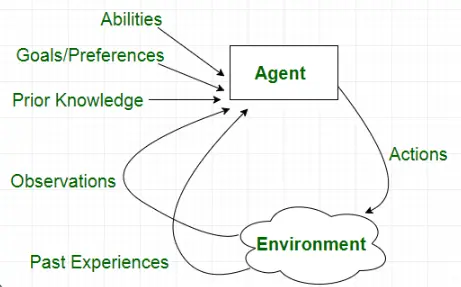

Integrating artificial intelligence has greatly enhanced product catalog management. AI enables companies to automate numerous tasks, improve data accuracy, and provide a more personalized experience to customers. With AI-driven catalog management tools, categorization, tagging, and inventory updates become more efficient and less error-prone, which leads to more accurate and consistent data. AI also analyzes market trends and demand fluctuations to optimize pricing strategies. By streamlining these cataloging tasks, AI allows businesses to manage large volumes of product data swiftly and effectively.

As the e-commerce landscape becomes increasingly competitive, leveraging AI in catalog management offers a significant advantage, helping businesses stay ahead by improving operational efficiency and customer satisfaction.

Future Trends of AI in Product Catalog Management

Initially, product catalog management was a manual, time-consuming process prone to human errors. The introduction of basic automation tools began to alleviate some of these burdens, but it wasn't until the advent of AI that significant improvements were realized.

Potential Applications Beyond 2024

1. Automated Product Categorization and Tagging

AI automatically categorizes and tags products based on their metadata and attributes, which reduces manual labor and minimizes errors. This leads to more organized catalogs and enhances searchability for customers. For example, in an online electronics store, AI can analyze product descriptions, specifications, and customer reviews to accurately categorize items such as laptops, smartphones, and accessories. This automated process helps ensure that each product is tagged correctly with relevant attributes like brand, model, features, and compatibility, making it easier for customers to find exactly what they're looking for without sifting through irrelevant items.
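To make the idea concrete, here is a minimal Python sketch of keyword-based categorization. It is a simple stand-in for a trained classifier, not a description of any particular product: the `CATEGORY_KEYWORDS` table and the `categorize` function are hypothetical names chosen for illustration.

```python
import re

# Hypothetical keyword lists per category. A production system would learn
# these associations from labeled catalog data instead of hard-coding them.
CATEGORY_KEYWORDS = {
    "laptops": {"laptop", "notebook", "ultrabook"},
    "smartphones": {"smartphone", "phone", "5g"},
    "accessories": {"charger", "case", "cable", "adapter"},
}


def tokenize(text):
    """Lowercase the text and split it into a set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def categorize(description):
    """Return the category whose keywords overlap the description the most,
    or "uncategorized" when no keyword matches at all."""
    tokens = tokenize(description)
    scores = {cat: len(tokens & kws) for cat, kws in CATEGORY_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "uncategorized"


print(categorize("14-inch ultrabook laptop with 16GB RAM"))
print(categorize("USB-C charger cable"))
```

A keyword table like this is easy to audit but brittle. The same interface could sit in front of a learned model once enough labeled products are available.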

2. Dynamic Pricing Optimization

AI algorithms analyze market trends, competitor pricing, and demand fluctuations to optimize pricing strategies, keeping prices competitive while maximizing profit margins. For example, suppose a retailer notices that a particular product is trending and in high demand. The AI system can suggest adjusting the price based on this demand, competitor prices, and historical sales data. If competitor prices are higher and demand is surging, the AI might recommend a slight price increase to maximize profit. Conversely, if demand drops or competitors lower their prices, the AI can suggest a price reduction to stay competitive. According to a McKinsey report, AI-powered dynamic pricing can increase revenue by 2-5%.
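The pricing logic described above can be sketched as a small rule-based function. This is an illustrative simplification, not how any specific pricing engine works: `suggest_price`, `demand_ratio` (recent sales divided by the historical average), and the 5% `max_step` cap are all assumptions made for the example.

```python
def suggest_price(current_price, competitor_price, demand_ratio, max_step=0.05):
    """Suggest a new price from demand and competitor pricing.

    demand_ratio is recent sales divided by the historical average
    (above 1.0 means demand is surging). max_step caps any single change.
    """
    if demand_ratio > 1.0 and competitor_price > current_price:
        # Demand is surging and competitors charge more: raise the price
        # slightly, but never above the competitor's price.
        target = min(competitor_price, current_price * (1 + max_step))
    elif demand_ratio < 1.0 or competitor_price < current_price:
        # Demand dropped or a competitor is cheaper: lower the price.
        lowered = current_price * (1 - max_step)
        if competitor_price < current_price:
            # No need to go below the competitor's price.
            target = max(competitor_price, lowered)
        else:
            target = lowered
    else:
        target = current_price
    return round(target, 2)


print(suggest_price(100.0, 110.0, demand_ratio=1.3))
print(suggest_price(100.0, 90.0, demand_ratio=0.8))
```

Capping each change with `max_step` is a design choice that keeps suggestions small enough for a human to review before they reach the live catalog.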

3. Personalized Product Recommendations

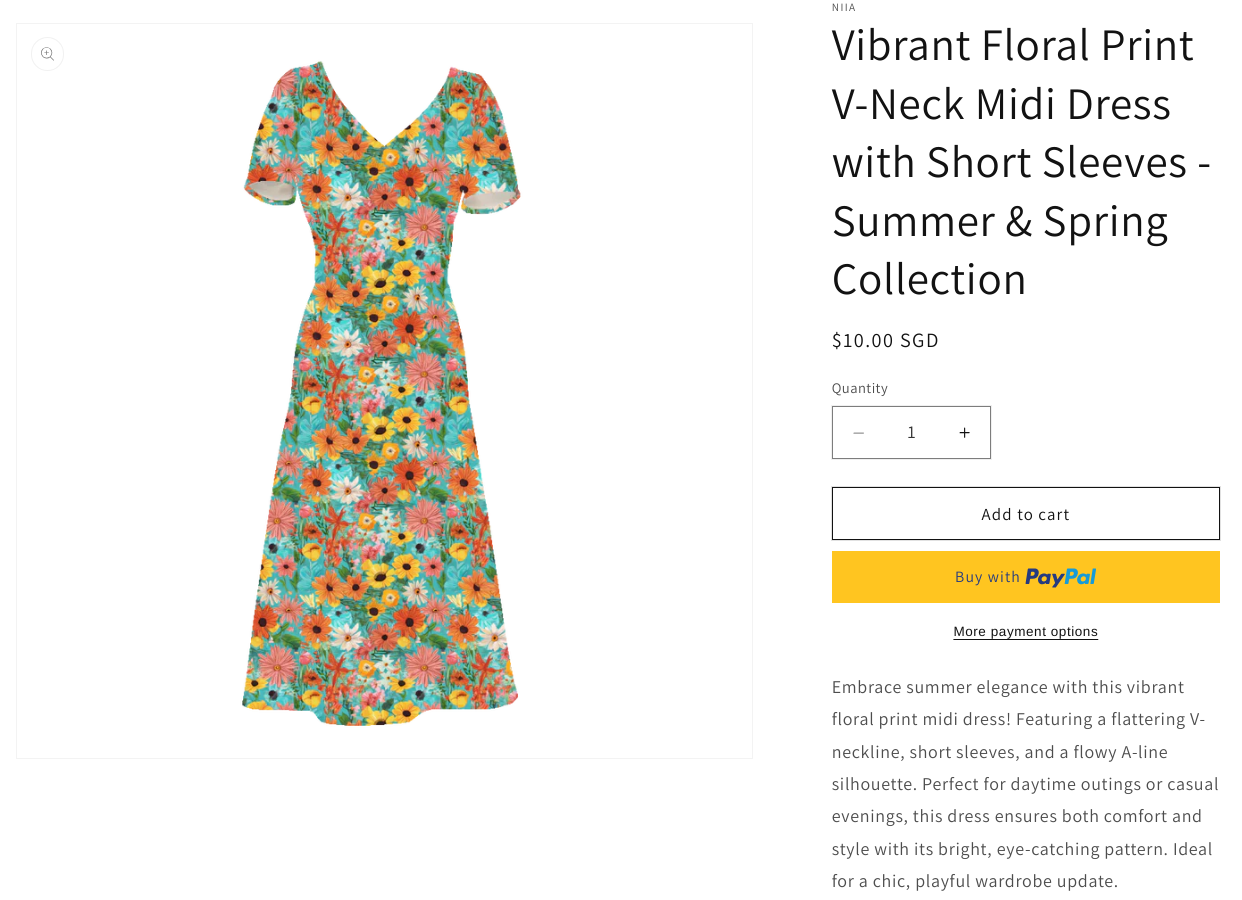

The use of artificial intelligence increases sales and enhances customer satisfaction by providing personalized product recommendations based on customer data. Businesses leveraging AI for personalization have seen a 15% uplift in sales on average, as reported by BCG. For example, in the online fashion retail industry, AI analyzes customer browsing and purchase history to recommend clothing items tailored to individual preferences. If a customer frequently buys athletic wear, the AI might suggest new arrivals in sportswear or notify the customer about upcoming sales on these items. This personalized approach not only increases the likelihood of purchase but also enhances the overall shopping experience.
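As a rough illustration of the athletic-wear example, the sketch below recommends unpurchased items from the categories a customer buys most often. It is a deliberately simple frequency heuristic rather than a real recommendation model, and the `recommend` function and its data shapes are hypothetical.

```python
from collections import Counter


def recommend(purchase_history, catalog, limit=3):
    """Recommend catalog items from the customer's most-purchased categories.

    purchase_history: list of (product_id, category) tuples.
    catalog: list of dicts with "id" and "category" keys.
    """
    owned = {product_id for product_id, _ in purchase_history}
    category_counts = Counter(category for _, category in purchase_history)

    # Keep only items the customer has not bought, in categories they buy from.
    candidates = [
        item for item in catalog
        if item["id"] not in owned and category_counts[item["category"]] > 0
    ]
    # Rank by how often the customer has bought from each item's category.
    candidates.sort(key=lambda item: category_counts[item["category"]], reverse=True)
    return [item["id"] for item in candidates[:limit]]


history = [("a1", "athletic"), ("a2", "athletic"), ("f1", "formal")]
catalog = [
    {"id": "f2", "category": "formal"},
    {"id": "a3", "category": "athletic"},
    {"id": "a1", "category": "athletic"},
    {"id": "h1", "category": "home"},
]
print(recommend(history, catalog))
```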

4. Intelligent Search and Discovery

AI-powered search engines understand natural language queries and context, delivering more accurate and relevant search results to users. Gartner predicts that by 2025, AI will handle 80% of all customer interactions. For example, in the e-commerce industry, if a customer searches for "comfortable running shoes for flat feet," an AI-powered search engine can interpret the specific requirements and deliver tailored results, such as running shoes with extra arch support and cushioning. This level of understanding and accuracy not only helps customers find exactly what they need but also enhances their overall shopping experience, leading to higher satisfaction and increased sales.
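The "flat feet" query above can be approximated with a small query-expansion sketch. Real AI search relies on language models rather than a lookup table, so treat this as an illustration of the idea only: the `PHRASE_TO_TAGS` mapping, the tag names, and the product data are all hypothetical.

```python
# Hypothetical mapping from customer phrasing to catalog attribute tags.
# An AI-powered engine would infer these relationships instead of listing them.
PHRASE_TO_TAGS = {
    "flat feet": {"arch-support"},
    "comfortable": {"cushioned"},
    "running shoes": {"running", "shoes"},
}


def expand_query(query):
    """Translate phrases found in the query into the attribute tags they imply."""
    lowered = query.lower()
    tags = set()
    for phrase, mapped_tags in PHRASE_TO_TAGS.items():
        if phrase in lowered:
            tags |= mapped_tags
    return tags


def search(query, products):
    """Return product names ranked by how many implied tags they carry."""
    wanted = expand_query(query)
    scored = [(len(wanted & product["tags"]), product["name"]) for product in products]
    matches = [pair for pair in scored if pair[0] > 0]
    matches.sort(key=lambda pair: pair[0], reverse=True)
    return [name for _, name in matches]


products = [
    {"name": "Trail Sandal", "tags": {"sandals"}},
    {"name": "Road Runner", "tags": {"running", "shoes"}},
    {"name": "StableStride", "tags": {"running", "shoes", "arch-support", "cushioned"}},
]
print(search("comfortable running shoes for flat feet", products))
```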

5. Improved Data Privacy and Security

AI-powered catalog management systems enhance data privacy and security by identifying sensitive information and enforcing governance policies. AI algorithms analyze metadata to ensure compliance with legal requirements, safeguarding data integrity and confidentiality. For example, in a healthcare organization using AI-powered catalog management, sensitive patient data such as medical histories and personal information can be automatically identified and tagged with strict access controls. This ensures that only authorized personnel can view or update sensitive information, maintaining compliance with regulations like HIPAA and GDPR while protecting patient privacy.
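A minimal sketch of the "identify, tag, then restrict" flow might look like the following. The regular expressions are simplified assumptions that catch only obvious email addresses and US-style Social Security numbers, and the `tag_sensitive_fields` and `can_view` helpers and the `clinician` role are hypothetical. Real compliance with HIPAA or GDPR requires far more than pattern matching.

```python
import re

# Simplified, assumed patterns for illustration only.
SENSITIVE_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def tag_sensitive_fields(record):
    """Return {field_name: [pattern names]} for fields that look sensitive."""
    tags = {}
    for field, value in record.items():
        hits = [
            name for name, pattern in SENSITIVE_PATTERNS.items()
            if pattern.search(str(value))
        ]
        if hits:
            tags[field] = hits
    return tags


def can_view(field, role, tags, authorized_roles=frozenset({"clinician"})):
    """Untagged fields are open to everyone; tagged fields need an authorized role."""
    return field not in tags or role in authorized_roles


record = {"name": "Jane", "contact": "jane@example.com", "note": "SSN 123-45-6789"}
tags = tag_sensitive_fields(record)
print(tags)
print(can_view("contact", "intern", tags))
```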

6. AI-Enabled Analytics

AI in product catalog management leverages powerful analytics to identify patterns and shifts in customer behavior, enabling data-driven decisions that enhance online sales. eCommerce analytics encompass metrics across the entire customer journey, from discovery and conversion to retention and advocacy. For instance, in the fashion industry, AI can analyze customer preferences and buying patterns to recommend trending clothing items. If the data shows an increasing interest in summer dresses, the AI can suggest prominently displaying these items, which can lead to higher conversions and improved sales.
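The summer-dresses example boils down to comparing interest between two periods. The sketch below flags categories whose view counts grew by at least a chosen threshold. The `trending_categories` function, the 20% default threshold, and the sample numbers are assumptions for illustration, not figures from any real store.

```python
def trending_categories(previous_views, current_views, min_growth=0.2):
    """Return (category, growth) pairs whose views grew by at least min_growth.

    Both arguments map category name to view count for one period.
    Categories with no baseline in previous_views are skipped.
    """
    trending = []
    for category, now in current_views.items():
        before = previous_views.get(category, 0)
        if before == 0:
            continue  # growth is undefined without a baseline
        growth = (now - before) / before
        if growth >= min_growth:
            trending.append((category, round(growth, 2)))
    # Fastest-growing categories first.
    trending.sort(key=lambda pair: pair[1], reverse=True)
    return trending


last_month = {"summer dresses": 100, "coats": 200, "hats": 50}
this_month = {"summer dresses": 150, "coats": 180, "hats": 55, "scarves": 10}
print(trending_categories(last_month, this_month))
```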

Preparing for the AI-Driven Future

Skills and Training Needed

Organizations must invest in training their workforce to handle AI tools and interpret AI-driven insights. Upskilling employees will be crucial for leveraging AI effectively. A LinkedIn report indicates that AI skills are among the top five in-demand skills globally.

Organizational Changes

Implementing AI may require structural changes within the organization. Companies need to foster a culture of innovation and adaptability to embrace AI technologies fully. IBM reports that 61% of high-performing companies have adopted a culture of AI and innovation.

Investment Considerations

Investing in AI infrastructure and tools is essential for long-term benefits. Companies should evaluate the cost-benefit ratio and plan their investments strategically. According to Accenture, AI investments are expected to boost profitability by an average of 38% by 2035.

Case Studies

Zara

As a global fashion retailer, Zara uses AI to enhance its product catalog management, particularly in automated inventory management and supply chain management. Using AI algorithms, Zara predicts fashion trends and customer preferences, which enables it to plan inventory more effectively and turn around orders more quickly.

Trend Analysis and Forecasting:

AI helps Zara analyze vast amounts of data from social media, customer feedback, and sales trends to identify emerging fashion trends. This enables Zara to quickly adapt its product offerings to meet customer demands, ensuring that its catalog remains relevant and appealing.

Personalized Shopping Experience:

Zara uses AI to provide personalized shopping experiences for its customers. By analyzing browsing behavior and purchase history, Zara's AI-powered recommendation system suggests products that align with individual preferences. This personalized approach has been instrumental in increasing customer loyalty and driving repeat purchases.

Conclusion

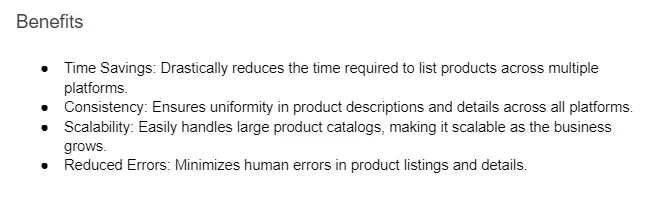

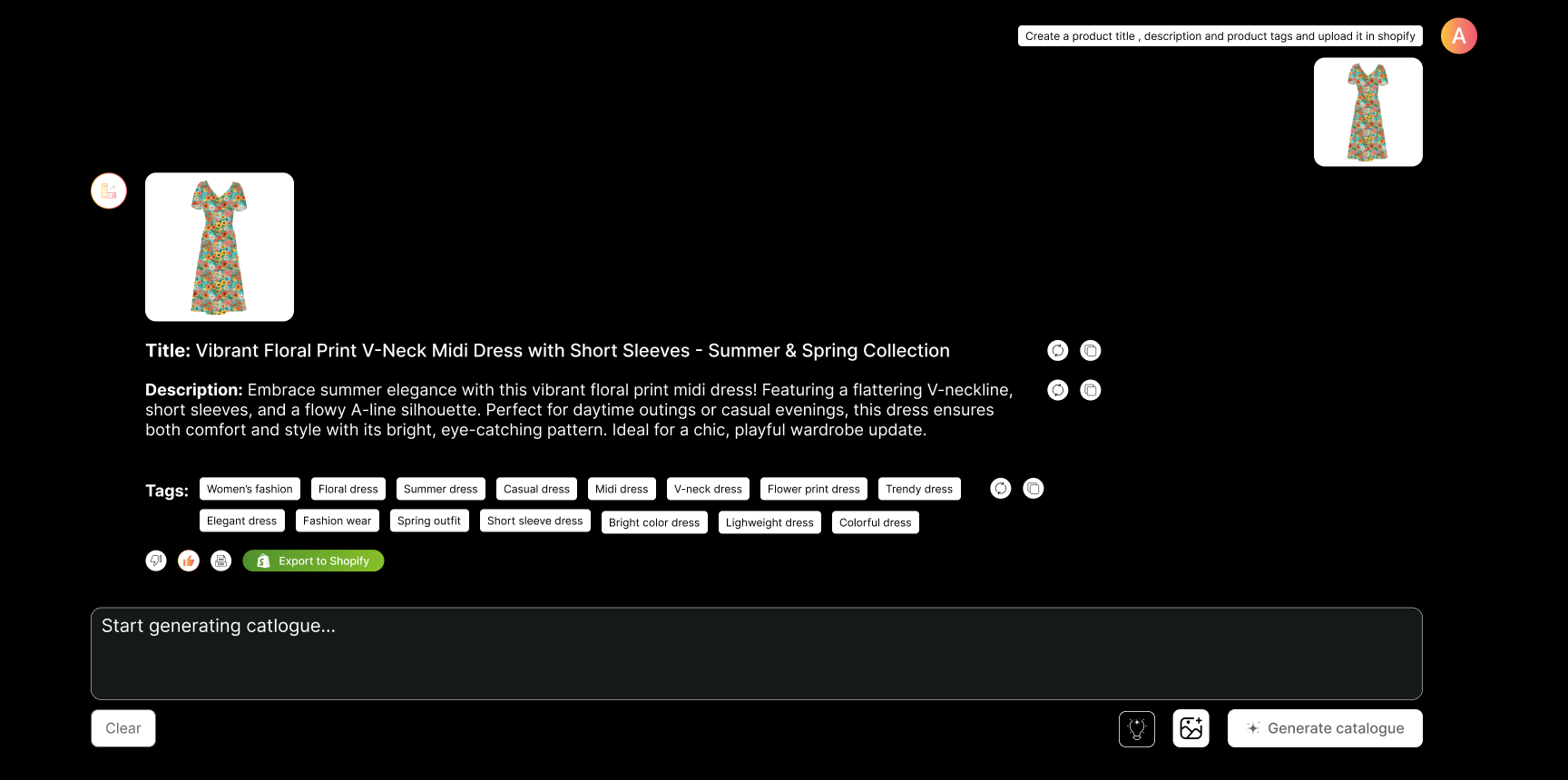

AI automates catalog processes, improves data accuracy, and enhances customer experiences, and these benefits outweigh challenges such as data quality and integration. As AI continues to evolve, its applications for catalog management will become increasingly sophisticated. Businesses that invest in AI today will be well-positioned to lead the market in the future, with streamlined catalog management, higher customer satisfaction, and improved operational efficiency. Explore the capabilities of the Navan AI platform today to see how it can revolutionize your catalog management and customer engagement strategies.