Introduction:

LangChain is one of the best frameworks available to developers who want to build applications with LLM capabilities. It makes it easier to organise large amounts of data so that LLMs can access it quickly, and it lets LLMs base their responses on the most recent data available online.

This lets developers create dynamic, data-responsive applications. So far, developers have used the open-source framework to build sophisticated AI chatbots, generative question-answering (GQA) systems, and text summarisation tools.

What is LangChain?

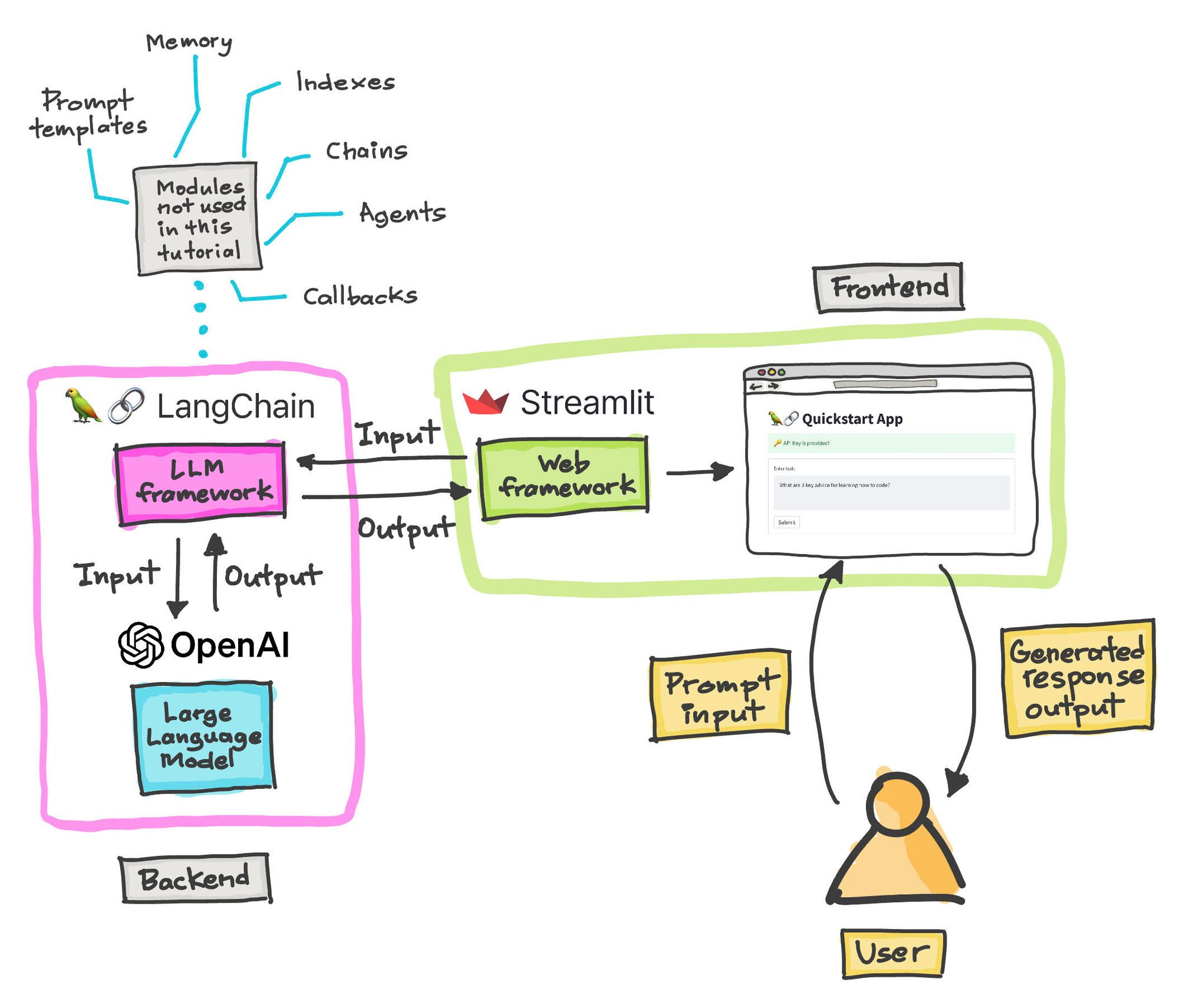

LangChain is an open-source framework for building applications that make use of large language models (LLMs). At its core, it is a prompt orchestration tool that makes it easier for teams to connect different prompts to one another.

LangChain started as an open-source project created by Harrison Chase. It quickly grew into a company, with Chase as its CEO.

When an LLM (such as GPT-3 or GPT-4) completes a single prompt, you get one complete response to one request. You could instruct the LLM to "create a sculpture," for instance, and it would comply. More complex instructions, such as "create a sculpture of an axolotl at the bottom of a lake," also work, and the LLM will probably give you what you asked for.

But what if you asked this instead:

"Tell me how to carve an axolotl sculpture out of wood, step by step."

To avoid making the user spell out every step and choose the order of execution, you can have the LLM generate the next step at each point, using the results of the previous steps as context.

This is what the LangChain framework does. It arranges a sequence of prompts to reach the intended outcome, and it gives developers an easy-to-use interface for communicating with LLMs. In this sense, LangChain acts as an abstraction layer over LLMs.
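The step-by-step idea can be sketched in plain Python. This is a minimal illustration, not LangChain code: `fake_llm` and `CANNED_STEPS` are hypothetical stand-ins for a real model call, so the only thing being shown is the loop that feeds earlier steps back in as context.

```python
from typing import Callable, List

# Hypothetical stand-in for a model: returns the next canned step based on
# how many "Step N:" lines already appear in the prompt.
CANNED_STEPS = [
    "Choose a block of soft wood.",
    "Sketch the axolotl outline on the block.",
    "Carve the rough shape, then refine the details.",
]

def fake_llm(prompt: str) -> str:
    steps_so_far = prompt.count("Step ")
    return CANNED_STEPS[steps_so_far]

def generate_steps(task: str, llm: Callable[[str], str], n_steps: int) -> List[str]:
    """Ask for one step at a time, passing earlier steps back as context."""
    steps: List[str] = []
    for _ in range(n_steps):
        context = "\n".join(f"Step {i + 1}: {s}" for i, s in enumerate(steps))
        prompt = f"Task: {task}\n{context}\nWhat is the next step?"
        steps.append(llm(prompt))
    return steps

steps = generate_steps("Carve an axolotl sculpture out of wood", fake_llm, 3)
for number, step in enumerate(steps, start=1):
    print(number, step)
```

With a real model in place of `fake_llm`, each call would see the steps produced so far, which is what allows the later steps to build on the earlier ones.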

LangChain Expression Language: What Is It?

LangChain Expression Language (LCEL) is a declarative way for developers to compose chains. It was designed from the ground up so that prototypes can be put into production without changing the code.
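LCEL joins steps with the `|` operator. The toy class below imitates that style of composition in plain Python so the mechanics are visible; `Step` is a hypothetical class written for this sketch, and the three lambdas are stand-ins for a prompt template, a model, and an output parser.

```python
from typing import Any, Callable

class Step:
    """Hypothetical wrapper that lets plain functions be joined with `|`."""

    def __init__(self, func: Callable[[Any], Any]) -> None:
        self.func = func

    def __or__(self, other: "Step") -> "Step":
        # `a | b` builds a new step that runs a, then feeds its result to b.
        return Step(lambda value: other.func(self.func(value)))

    def invoke(self, value: Any) -> Any:
        return self.func(value)

# Stand-ins for a prompt template, a model, and an output parser.
prompt = Step(lambda topic: f"Write one line about {topic}.")
model = Step(lambda text: f"MODEL OUTPUT: {text}")
parser = Step(lambda text: text.split(": ", 1)[1])

chain = prompt | model | parser
result = chain.invoke("axolotls")
print(result)
```

The chain is described once, as a composition of parts, and how it is executed is left to the object that `|` builds. That separation is the sense in which the approach is declarative.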

Some advantages of LCEL are as follows:

When you build your chains with LCEL, you get the best possible time-to-first-token (the time that elapses before the first piece of output appears). For some chains, this means tokens are streamed straight from the LLM to a streaming output parser, so you receive incremental, parsed output chunks at the same rate as the LLM provider emits the raw tokens.
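The streaming behaviour described above can be approximated with Python generators. This is only a sketch under simplifying assumptions: `fake_token_stream` is a hypothetical stand-in for a model that emits tokens one at a time, and `uppercase_parser` is a deliberately trivial parser.

```python
from typing import Iterable, Iterator

def fake_token_stream(text: str) -> Iterator[str]:
    """Hypothetical stand-in for a model that emits one token at a time."""
    for token in text.split():
        yield token

def uppercase_parser(tokens: Iterable[str]) -> Iterator[str]:
    """Streaming parser: transforms each token as it arrives, without buffering."""
    for token in tokens:
        yield token.upper()

stream = uppercase_parser(fake_token_stream("axolotls live in lakes"))
first_chunk = next(stream)   # available before the remaining tokens are produced
remaining = list(stream)
print(first_chunk, remaining)
```

Because both stages are lazy, the first parsed chunk is available as soon as the first token exists, which is the property that keeps time-to-first-token low.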

Any chain created with LCEL can be called through both the synchronous API (for example, in a Jupyter notebook while experimenting) and the asynchronous API (for example, in a LangServe server). Because the same code serves for prototypes and production, you get good performance and the ability to handle many concurrent requests on the same server.
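A rough sketch of one piece of logic exposed through both a synchronous and an asynchronous entry point, using only `asyncio` from the standard library. The function names are chosen for this illustration, and `asyncio.sleep(0)` stands in for awaiting a network call.

```python
import asyncio
from typing import List

def answer(question: str) -> str:
    """Hypothetical chain logic shared by both entry points."""
    return f"Answer to: {question}"

def invoke(question: str) -> str:
    # Synchronous entry point, convenient in a notebook.
    return answer(question)

async def ainvoke(question: str) -> str:
    # Asynchronous entry point; the sleep stands in for awaiting network I/O.
    await asyncio.sleep(0)
    return answer(question)

async def serve_many(questions: List[str]) -> List[str]:
    # A server can await many requests concurrently on one event loop.
    return list(await asyncio.gather(*(ainvoke(q) for q in questions)))

sync_result = invoke("What is LCEL?")
async_results = asyncio.run(serve_many(["q1", "q2"]))
print(sync_result, async_results)
```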

Steps of an LCEL chain that do not depend on one another can be run concurrently.

Any chain created with LCEL can be deployed quickly with LangServe.
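To illustrate what running independent chain steps concurrently means, the sketch below uses `ThreadPoolExecutor` from the standard library. The two steps (`summarise` and `count_words`) are hypothetical placeholders, and this is not a description of how LCEL schedules work internally.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Dict

def summarise(text: str) -> str:
    # Placeholder "summary": keep only the first sentence.
    return text.split(".")[0] + "."

def count_words(text: str) -> int:
    return len(text.split())

def run_parallel(text: str) -> Dict[str, Any]:
    """Run two independent steps concurrently and collect results by name."""
    steps = {"summary": summarise, "word_count": count_words}
    with ThreadPoolExecutor() as pool:
        futures = {name: pool.submit(func, text) for name, func in steps.items()}
        return {name: future.result() for name, future in futures.items()}

parallel_result = run_parallel("Axolotls are salamanders. They live in lakes.")
print(parallel_result)
```

Neither step needs the other's output, so they can safely share the same input and run side by side.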

Why would you want to use LangChain?

Even when used with only one prompt, LLMs are already very powerful. But they essentially carry out completions by predicting the most likely next word. They don't pause to consider their actions or their responses the way humans do. That's what we would like to think, anyway.

Reasoning is the process of drawing new conclusions from information acquired before the act of communication. We view the process of making an axolotl sculpture as a series of small actions that build up to larger ones, rather than as a single, uninterrupted activity.

With the LangChain framework, programmers can design agents that break larger tasks down into smaller ones and reason about them. By chaining complex instructions together, LangChain lets you use intermediate steps to give completions context and memory.
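One simple way to picture "memory" is a wrapper that replays earlier turns in every new prompt. The sketch below assumes exactly that and nothing more: `MemoryChat` and `echo_turns` are hypothetical names, and the stand-in model only reports how much history it can see.

```python
from typing import Callable, List, Tuple

class MemoryChat:
    """Hypothetical wrapper that replays earlier turns as context."""

    def __init__(self, llm: Callable[[str], str]) -> None:
        self.llm = llm
        self.history: List[Tuple[str, str]] = []

    def ask(self, message: str) -> str:
        past = "".join(f"User: {u}\nAI: {a}\n" for u, a in self.history)
        reply = self.llm(f"{past}User: {message}\nAI:")
        self.history.append((message, reply))
        return reply

def echo_turns(prompt: str) -> str:
    # Stand-in model: reports how many user turns it can see in the prompt.
    return f"I can see {prompt.count('User:')} user turn(s)."

chat = MemoryChat(echo_turns)
first = chat.ask("Hello")
second = chat.ask("Do you remember me?")
print(first)
print(second)
```

The model itself stays stateless. The memory lives entirely in the prompt that the wrapper assembles.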

Why is the industry so enthralled with LangChain?

The intriguing thing about LangChain is that it enables teams to add context and memory to existing LLMs. By artificially adding "reasoning," it lets LLMs perform increasingly difficult tasks with greater accuracy and precision.

Developers are enthusiastic about LangChain because it offers an alternative to building user interfaces by dragging and dropping components or by writing code. Users can simply ask for what they want.

How does LangChain function?

LangChain supports many language models and providers, including Hugging Face models, GPT-3, and Jurassic-1 Jumbo. It is available as a Python library and as a JavaScript library.

To use LangChain, you first need a language model. You can either use a publicly available model such as GPT-3 or build your own.

Once a model is in place, you can use LangChain to create applications. LangChain provides a variety of tools and APIs that make it easy to connect language models to outside data sources, let them interact with their environment, and build complex applications.

It does this by connecting a series of elements, known as links, to form a chain. Every link in the chain performs a specific function, such as:

- Formatting user-provided data

- Querying a data source

- Calling a language model

- Handling the output of the language model

A chain's links are joined sequentially, with each link's output acting as its subsequent link's input. Small operations can be chained together to perform larger, more complex ones.
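The four kinds of link listed above can be sketched as ordinary functions joined in sequence. Everything here is illustrative: the `FACTS` dictionary is an invented data source, `call_model` is a stand-in that makes no real model call, and `run_chain` is a helper written for this example.

```python
from functools import reduce
from typing import Any, Callable, Dict, List

# Tiny in-memory "data source"; contents are invented for illustration.
FACTS = {"axolotl": "An axolotl is a salamander native to Mexico."}

def format_input(raw: str) -> Dict[str, Any]:
    return {"question": raw.strip().lower()}

def use_data_source(state: Dict[str, Any]) -> Dict[str, Any]:
    hits = [fact for key, fact in FACTS.items() if key in state["question"]]
    return {**state, "context": " ".join(hits)}

def call_model(state: Dict[str, Any]) -> Dict[str, Any]:
    # Stand-in for a model call: it repeats the retrieved context, padded.
    return {**state, "raw_answer": f"  {state['context']}  "}

def handle_output(state: Dict[str, Any]) -> str:
    return state["raw_answer"].strip()

def run_chain(links: List[Callable[[Any], Any]], value: Any) -> Any:
    """Feed each link's output into the next link, in order."""
    return reduce(lambda acc, link: link(acc), links, value)

answer = run_chain(
    [format_input, use_data_source, call_model, handle_output],
    "  What is an AXOLOTL? ",
)
print(answer)
```

Each function only needs to agree with its neighbours on the shape of the data passed between them, which is what makes links easy to swap or reorder.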

What are LangChain's core building blocks?

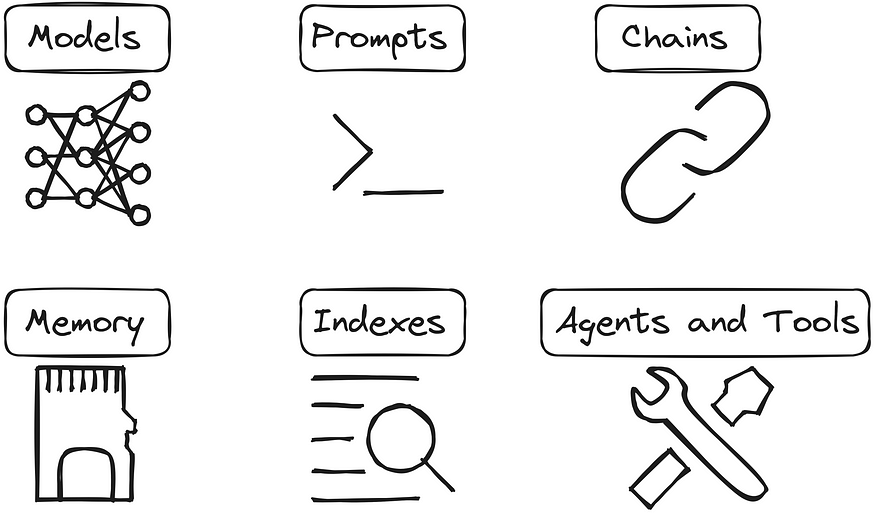

LLMs

LangChain is built around large language models (LLMs), which are trained on enormous text and code datasets. Among other things, you can use them to create content, translate between languages, and answer questions.

Prompt templates

Prompt templates format user input so that the language model can understand it. They can be used to describe the task that the language model is supposed to perform or to set the scene for the user's input. For instance, a chatbot's prompt template may contain the user's name and query.
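A prompt template along the lines of the chatbot example can be sketched with `string.Template` from Python's standard library. The wording of the template and the `build_prompt` helper are assumptions made for this illustration.

```python
from string import Template

# Placeholders are filled in per request; the wording is illustrative.
CHAT_TEMPLATE = Template(
    "You are a helpful assistant.\n"
    "The user's name is $name.\n"
    "Answer their question: $query"
)

def build_prompt(name: str, query: str) -> str:
    # substitute() raises KeyError if a placeholder is left unfilled.
    return CHAT_TEMPLATE.substitute(name=name, query=query)

prompt = build_prompt("Ada", "What is LangChain?")
print(prompt)
```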

Indexes

Indexes are structures that organise documents so that an LLM can work with them efficiently. They can hold the documents' text, their metadata, and the relationships between documents.

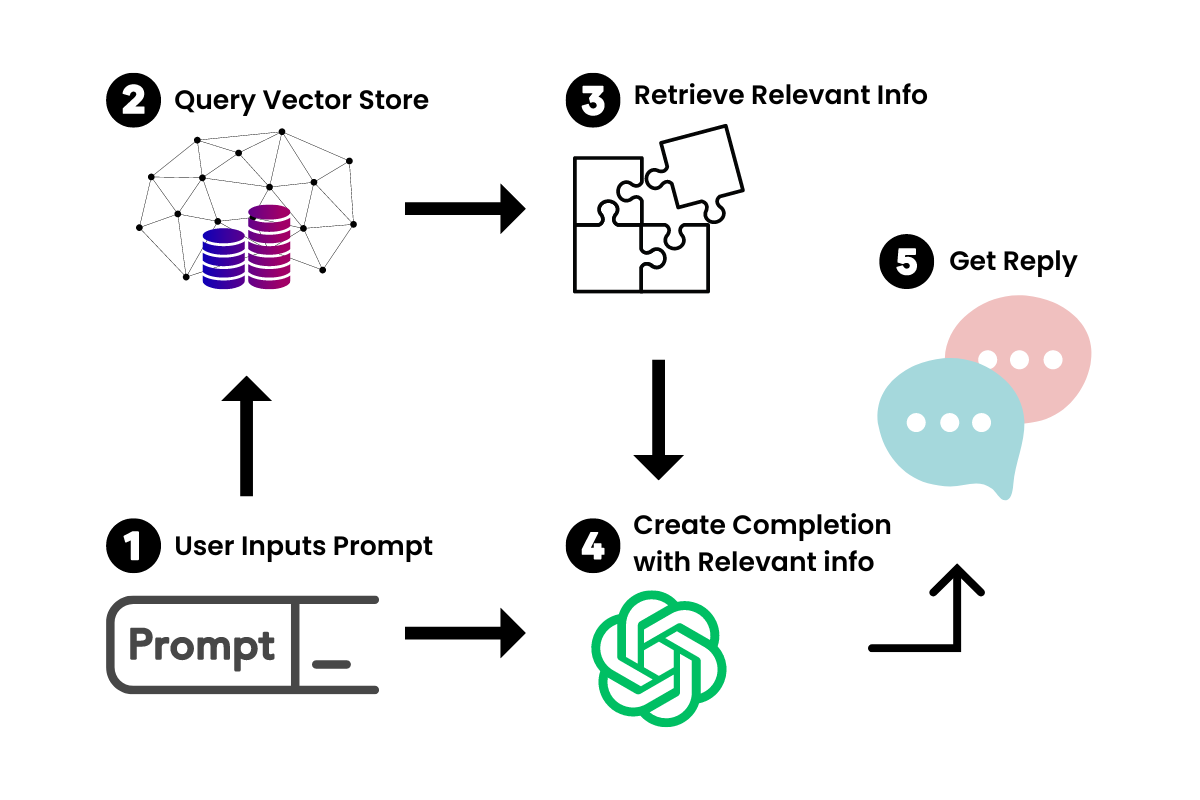

Retrievers

Retrievers are algorithms that search an index for particular information. They can be used to find the documents most similar to a given document or the documents relevant to a user's query. Retrievers are essential for improving the accuracy and speed of the LLM's responses.
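A retriever can be as simple as ranking documents by how many words they share with the query. The sketch below takes that deliberately naive approach. The `INDEX` contents are invented, and real retrievers typically use embeddings or more robust text scoring.

```python
from typing import Dict, List, Set

# Toy index: document id -> text. Contents are invented for illustration.
INDEX: Dict[str, str] = {
    "doc1": "Axolotls are salamanders that live in lakes.",
    "doc2": "LangChain connects language models to data sources.",
    "doc3": "Wood carving requires sharp tools and patience.",
}

def tokenize(text: str) -> Set[str]:
    return {word.strip(".,?!").lower() for word in text.split()}

def retrieve(query: str, index: Dict[str, str], k: int = 1) -> List[str]:
    """Return ids of the k documents sharing the most words with the query."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(text)), doc_id) for doc_id, text in index.items()]
    scored = [(score, doc_id) for score, doc_id in scored if score > 0]
    # Highest overlap first; ties are broken by document id.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for _, doc_id in scored[:k]]

top = retrieve("Where do axolotls live?", INDEX)
print(top)
```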

Output parsers

Output parsers are responsible for formatting the responses that the LLM produces. They can add more information, change the response's structure, or remove any unwanted content. Output parsers are essential for making sure that the LLM's responses are easy to understand and act on.
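As a small example of removing unwanted content and changing a response's structure, the parser below turns free-form model text into a clean Python list. The `raw_output` string is an invented sample, and `parse_numbered_list` is a helper written for this sketch.

```python
import re
from typing import List

def parse_numbered_list(raw: str) -> List[str]:
    """Extract the items of a numbered list, dropping any surrounding chatter."""
    items = []
    for line in raw.splitlines():
        # Accept "1. item" and "1) item", ignoring leading whitespace.
        match = re.match(r"\s*\d+[.)]\s+(.*\S)", line)
        if match:
            items.append(match.group(1))
    return items

raw_output = """Sure! Here are the steps:
1. Choose the wood
2. Sketch the outline
3) Carve the shape
Let me know if you need more help."""

steps = parse_numbered_list(raw_output)
print(steps)
```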

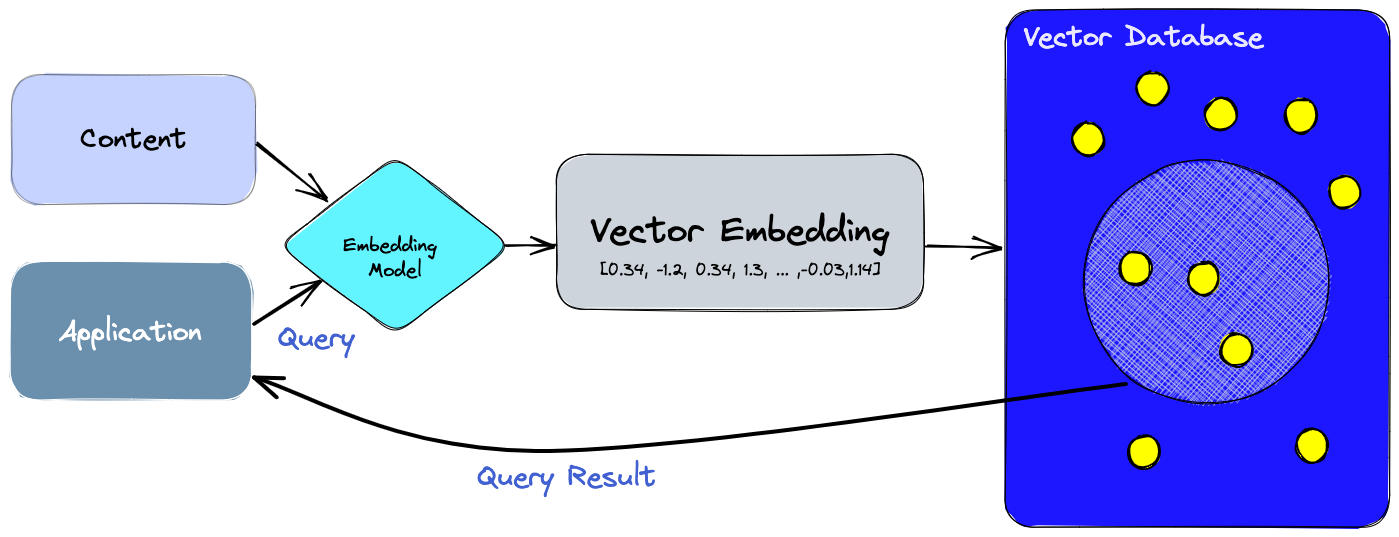

Vector Store

A vector store holds mathematical representations (embeddings) of words and phrases. It is useful for tasks such as summarisation and question answering. For example, a vector store can be used to find all the words that are similar to the word "cat".
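The "similar to cat" lookup can be sketched with cosine similarity over a few hand-made vectors. The numbers below are invented for illustration. Real embeddings are produced by a model and have far more dimensions.

```python
import math
from typing import Dict, List, Tuple

# Hand-made 3-dimensional vectors, chosen so related words point the same way.
VECTORS: Dict[str, List[float]] = {
    "cat": [0.9, 0.8, 0.1],
    "kitten": [0.85, 0.9, 0.15],
    "dog": [0.7, 0.3, 0.2],
    "car": [0.1, 0.1, 0.95],
}

def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm

def most_similar(word: str, k: int = 2) -> List[Tuple[str, float]]:
    """Return the k stored words whose vectors are closest to the given word."""
    query = VECTORS[word]
    others = [(w, cosine(query, v)) for w, v in VECTORS.items() if w != word]
    return sorted(others, key=lambda pair: pair[1], reverse=True)[:k]

neighbours = most_similar("cat")
print([w for w, _ in neighbours])
```

A production vector store adds persistence and approximate nearest-neighbour search on top of this idea, but the underlying comparison is the same kind of similarity score.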

Agents

Agents are programs that can break large jobs down into smaller, more manageable tasks. An agent can be used to control a chain's flow and choose which tasks to complete. For instance, it can decide whether a user's question is better handled by a human expert or by a language model.
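The routing decision in that example can be sketched as follows. The keyword rule in `choose_tool` is a placeholder for a decision that a real agent would usually delegate to an LLM, and both tools are hypothetical stubs.

```python
from typing import Callable, Dict

def language_model_tool(question: str) -> str:
    return f"[model] Drafted an answer to: {question}"

def human_expert_tool(question: str) -> str:
    return f"[human] Escalated to an expert: {question}"

TOOLS: Dict[str, Callable[[str], str]] = {
    "model": language_model_tool,
    "human": human_expert_tool,
}

# Keywords that trigger escalation; an assumption made for this sketch.
SENSITIVE = ("legal", "medical", "refund")

def choose_tool(question: str) -> str:
    lowered = question.lower()
    return "human" if any(word in lowered for word in SENSITIVE) else "model"

def run_agent(question: str) -> str:
    # Look up the chosen tool by name and hand it the question.
    return TOOLS[choose_tool(question)](question)

routine = run_agent("What is an axolotl?")
escalated = run_agent("I need legal advice about a contract.")
print(routine)
print(escalated)
```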

Advantages of adopting LangChain:

Scalability: Applications built with LangChain can handle enormous amounts of data.

Adaptability: The framework's versatility enables the development of a broad range of applications, such as question-answering systems and chatbots.

Extensibility: The framework's expandability allows developers to incorporate their own features and functionalities.

Simple to use: LangChain provides a high-level API for integrating language models with a range of data sources and creating intricate apps.

Open source: LangChain is a freely available framework that can be used and altered.

Vibrant community: You can get help from a sizable and active community of LangChain developers and users.

Excellent documentation: The documentation is clear and comprehensive.

Integrations: Flask and TensorFlow are only two examples of the libraries and frameworks with which LangChain can be integrated.

How to begin using LangChain?

The source code for LangChain is available on GitHub. You can download it and install it on your computer.

LangChain can be easily installed on cloud platforms because it is also available as a Docker image.

It can also be installed with a single Python pip command: pip install langchain

Use the following command to install all of LangChain's integration requirements: pip install langchain[all]

You're now ready to start a new project!

In a newly created directory, execute the following command: initial langchain

The next step is to import the necessary modules and create a chain (a collection of links, each of which serves a specific purpose) by joining them together.

Conceptually, you create a chain object and then add links to it. The following schematic snippet creates a chain that calls a language model and gets its answer (the names Chain, Link, add_link, run, and get_output should be read as illustrative rather than as LangChain's actual API): Chain().add_link(Link(model="openai", prompt="Make a sculpture of an axolotl")). Call run() on the chain object to start the chain. The chain's output is the result of its final link, and get_output() returns it.
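To make the Chain and Link snippet above concrete, here is a toy implementation in plain Python. These classes are hypothetical and defined entirely within the example. They are not imported from LangChain, and `fake_openai` is a stand-in that makes no network call.

```python
from typing import List, Optional

def fake_openai(prompt: str) -> str:
    # Stand-in for a hosted model; no network call is made.
    return f"(model reply to: {prompt})"

MODELS = {"openai": fake_openai}

class Link:
    """Hypothetical link that sends a fixed prompt to a named model."""

    def __init__(self, model: str, prompt: str) -> None:
        self.model = MODELS[model]
        self.prompt = prompt

    def __call__(self, previous: Optional[str]) -> str:
        # Prepend the previous link's output, if any, as context.
        text = self.prompt if previous is None else f"{previous}\n{self.prompt}"
        return self.model(text)

class Chain:
    """Hypothetical chain that runs its links in the order they were added."""

    def __init__(self) -> None:
        self.links: List[Link] = []
        self.output: Optional[str] = None

    def add_link(self, link: Link) -> "Chain":
        self.links.append(link)
        return self  # returning self allows Chain().add_link(...) chaining

    def run(self) -> None:
        result: Optional[str] = None
        for link in self.links:
            result = link(result)
        self.output = result

    def get_output(self) -> Optional[str]:
        return self.output

chain = Chain().add_link(Link(model="openai", prompt="Make a sculpture of an axolotl"))
chain.run()
output = chain.get_output()
print(output)
```

Adding a second link with `add_link` would pass the first link's reply along as context, matching the description of a chain's output being the result of its final link.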

With LangChain, what kinds of apps can you create?

Summarisation and content generation

LangChain is useful for building summarisation systems that can generate summaries of blog posts, news stories, and other types of text. Content generators that produce engaging and useful text are another prominent use case.

Chatbots

Naturally, one of the best applications for LangChain is in chatbots or any other system that can answer queries. These systems will have the capacity to retrieve and handle data from various sources, including the internet, databases, and APIs. Chatbots are capable of answering questions, offering assistance to customers, and producing original material in the form of emails, letters, screenplays, poems, code, and more.

Data analysis software

LangChain can also be used to build data analysis tools that help people understand the relationships between different pieces of data.

Conclusion:

Currently, the main use case for LangChain is chat-based apps built on top of LLMs (especially ChatGPT), sometimes known as "chat interfaces." The company's CEO, Harrison Chase, stated in a recent interview that the best use case at the moment is "chat over your documents." To improve the chat experience in apps, LangChain also offers further features such as streaming, which delivers the LLM's output token by token rather than all at once.

We conduct structured, instructor-led live workshops and training sessions on topics related to AI, ML, and Generative AI. We recently completed the LangChain series - introduction, building a LangChain app and deploying the app. We shall be organising more such sessions. To join, please visit https://nas.io/upskill-pro

navan.ai has a no-code platform - nstudio.navan.ai where users can build computer vision models within minutes without any coding. Developers can sign up for free on nstudio.navan.ai

Want to add Vision AI machine vision to your business? Reach us on https://navan.ai/contact-us for a free consultation.