Human emotion classification is the process of identifying and categorizing emotions in human expressions, human speech, or text. This can be done through various techniques, such as natural language processing, machine learning, and sentiment analysis.

The goal of emotion classification is to understand and interpret human emotions in order to improve communication, decision-making, and overall emotional intelligence. Commonly classified categories include happiness, sadness, anger, fear, and surprise, along with a neutral state.

Human emotion classification has a wide range of potential uses:

1. Customer service: By classifying emotions in customer interactions, companies can improve their customer service by understanding and addressing customer needs and concerns in a timely and appropriate manner.

2. Marketing: By classifying emotions in customer feedback and social media posts, companies can understand how their products and services are perceived by consumers, and make adjustments to their marketing strategies accordingly.

3. Healthcare: By classifying emotions in patient interactions, healthcare providers can better understand and address patient concerns and needs, and improve patient outcomes.

4. Mental Health: By classifying emotions in therapy sessions, psychologists and mental health professionals can better understand the emotional states of their patients and provide more effective treatment.

5. Human-computer interaction: By classifying emotions in human-computer interactions, researchers can design more natural and intuitive human-computer interfaces that adapt to the emotions of the user.

6. Robotics: By classifying emotions in human-robot interactions, researchers can design robots that can respond appropriately to human emotions, making them more natural and effective collaborators.

7. Education: By classifying emotions in students' responses to educational materials, teachers can better understand how students are responding to the material and make adjustments accordingly.

8. Law enforcement: By classifying emotions in police interrogations, law enforcement officials can better understand the emotional state of suspects and make more informed decisions.

Overall, human emotion classification can be used to improve communication, decision-making, and overall emotional intelligence in a wide range of fields.

Several types of data are important for human emotion classification, including:

- Facial expressions and body language: This can include video recordings of people's faces and bodies as they express emotions. This data can be used to train models to classify emotions based on facial expressions and body language.

- Text data: This can include written transcripts of speech or written text, such as social media posts, emails, or customer reviews. This data can be used to train machine learning models to classify emotions based on the words used and the context in which they are used.

- Speech data: This can include audio recordings of speech, such as phone conversations or interviews. This data can be used to train models to classify emotions based on features such as pitch, intonation, and rhythm.

- Physiological data: This can include data from sensors that measure physiological responses, such as heart rate, skin conductance, and respiration. This data can be used to train models to classify emotions based on physiological responses.

- Metadata: This can include information such as the speaker's age, gender, ethnicity, and cultural background. This data can be used to train models to classify emotions based on demographic factors.

Case Study: Using Facial Expression Recognition in the Retail Industry

A small case study of human emotion classification could involve using facial expression recognition to improve customer service in a retail store.

In this study, a retail store would use a camera system to capture images of customers' faces as they enter the store. A machine learning model would then be trained to recognize and classify different facial expressions such as happiness, sadness, anger, and neutrality. Once trained, the model would be applied to the live camera feed to classify the emotions of customers as they enter the store.

The store could use this data to identify patterns and trends in customer emotions, such as when customers are feeling happy or stressed. They could then use this information to improve their customer service by having sales associates approach customers who appear stressed or unhappy and offer assistance. Additionally, the store could use this data to adjust the store layout or product displays to create a more pleasant shopping experience.
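To make the idea of "patterns and trends" concrete, here is a minimal sketch of how the classifier's output could be summarized by hour of day. The records, the `NEGATIVE` grouping, and the function name are all hypothetical and only illustrate the aggregation step, not any particular store's system.

```python
from collections import Counter, defaultdict

# Hypothetical classifier output: (hour of day, predicted emotion) per customer.
predictions = [
    (10, "happy"), (10, "neutral"), (10, "happy"),
    (17, "angry"), (17, "sad"), (17, "neutral"), (17, "angry"),
]

# Assumption: these labels are treated as "stressed or unhappy".
NEGATIVE = {"angry", "sad"}


def negative_share_by_hour(records):
    """Return {hour: fraction of customers with a negative predicted emotion}."""
    counts = defaultdict(Counter)
    for hour, emotion in records:
        counts[hour][emotion] += 1

    shares = {}
    for hour, counter in counts.items():
        total = sum(counter.values())
        negative = sum(counter[e] for e in NEGATIVE)
        shares[hour] = negative / total
    return shares


shares = negative_share_by_hour(predictions)
# Hour with the highest share of unhappy customers in the sample data.
worst_hour = max(shares, key=shares.get)
print(shares)
print(worst_hour)
```

A summary like this could tell the store when to schedule more sales associates on the floor.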

As a result of this study, the retail store would be able to improve its customer service and increase customer satisfaction by using human emotion classification to understand the emotions of its customers. This case study shows how human emotion classification can be used in real-world scenarios to improve business practices and customer experience.

How can navan.ai help you build a facial emotion recognition/classification model without writing a single line of code?

1. Visit nstudio.navan.ai and sign up using your Gmail ID.

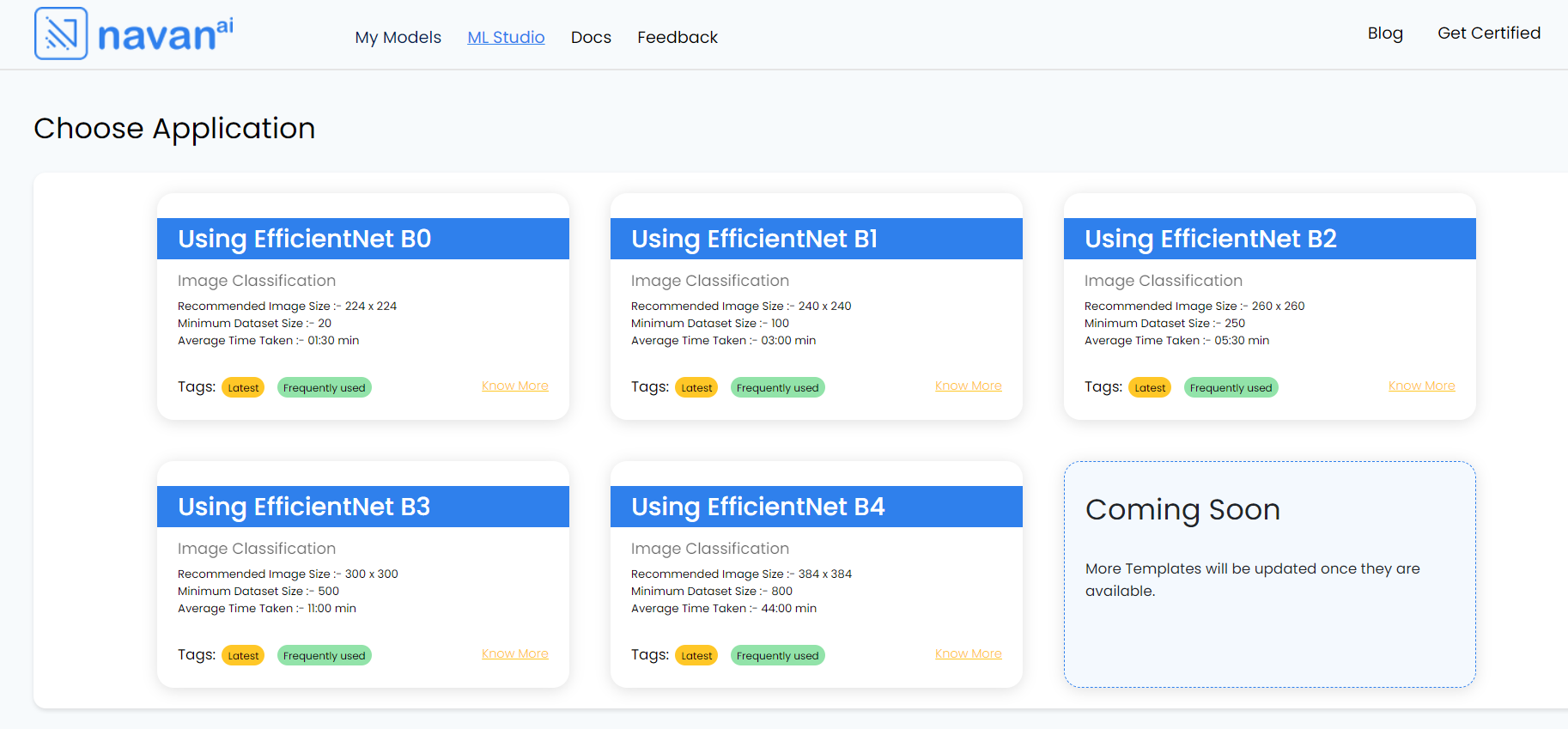

2. Choose a suitable model architecture: We chose EfficientNet-B4, a Convolutional Neural Network (CNN) architecture, based on several factors such as accuracy, computational efficiency, and scalability. EfficientNet-B4 offers a good trade-off between accuracy and computational efficiency, and it can handle high-resolution images, at the cost of a larger model size and more parameters than smaller models. This makes it a suitable choice for our use case, a human facial emotion classification application.

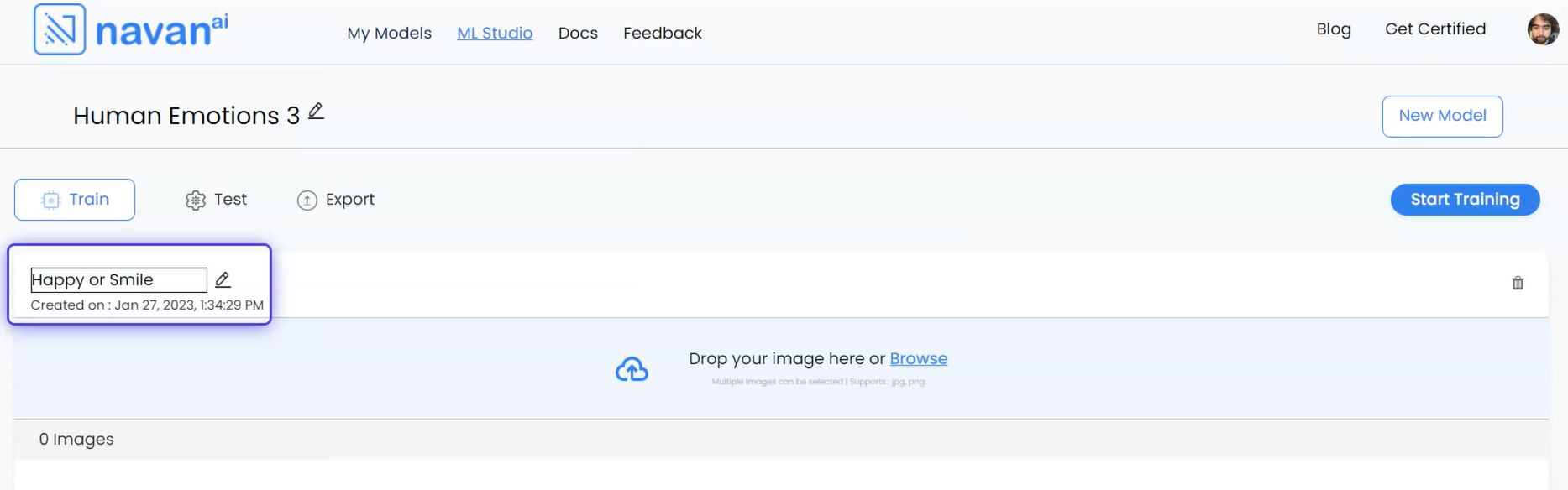

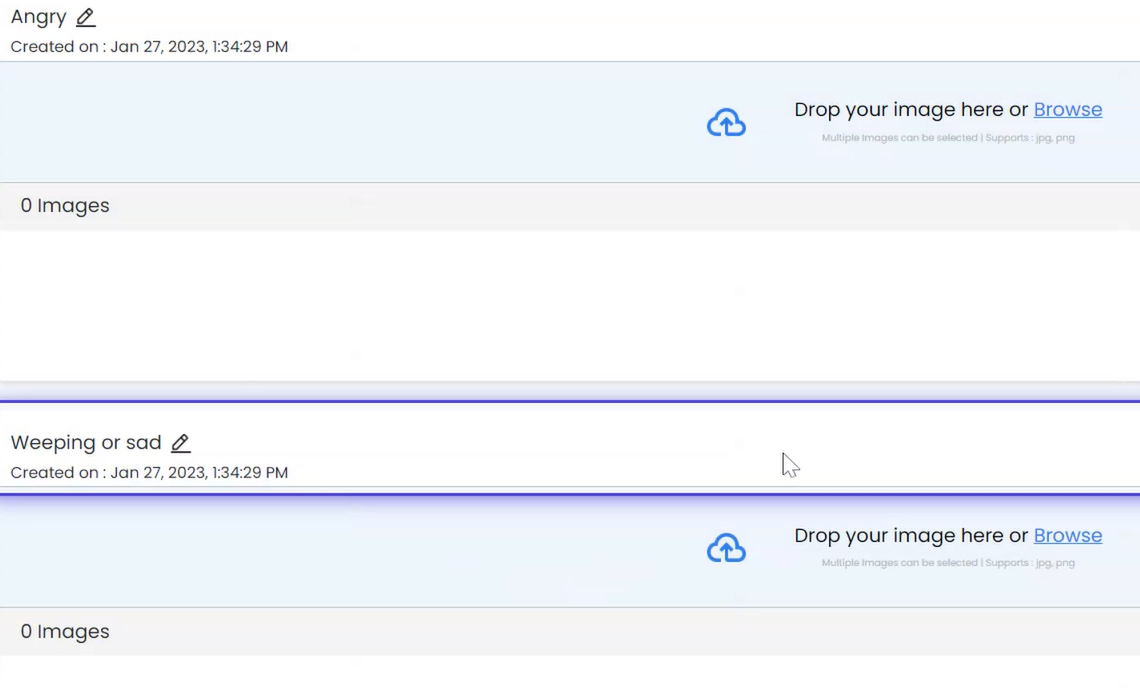

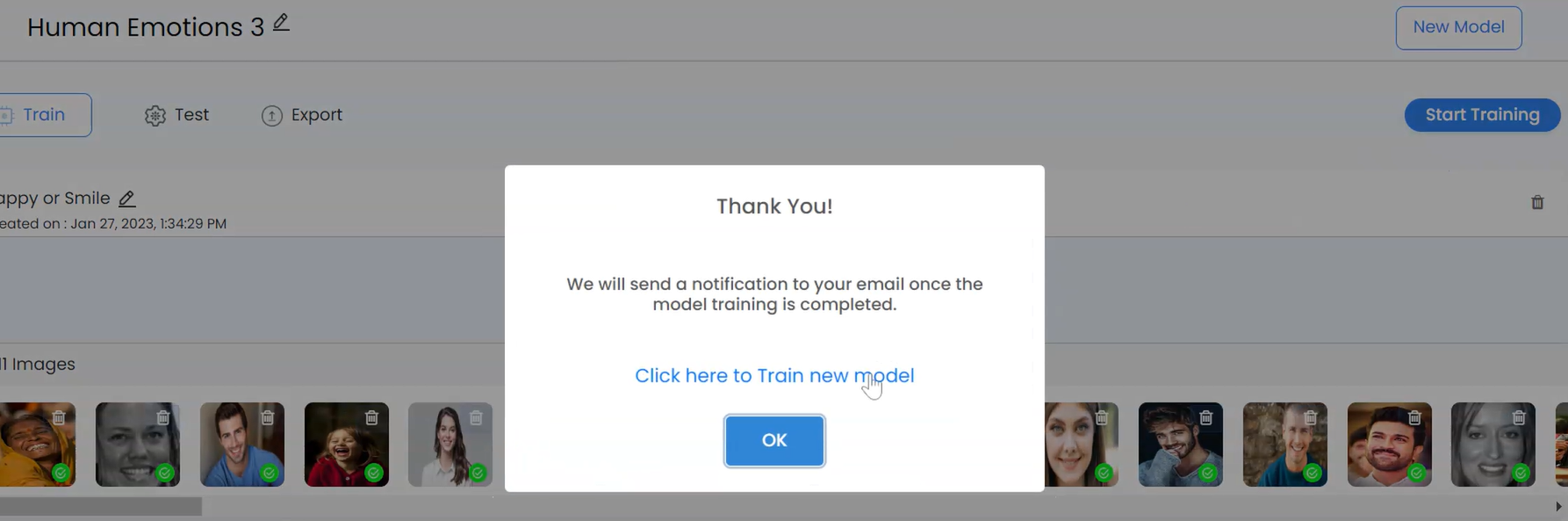

3. Training the model: We consider three classes here, Class 1 [Happy or Smile], Class 2 [Angry], and Class 3 [Weeping or Sad], and we have named the model “HUMAN EMOTIONS 3”. We create a class for each category. After we upload images into each class, the model is ready to be trained.

The first class, Happy or Smile, is created.

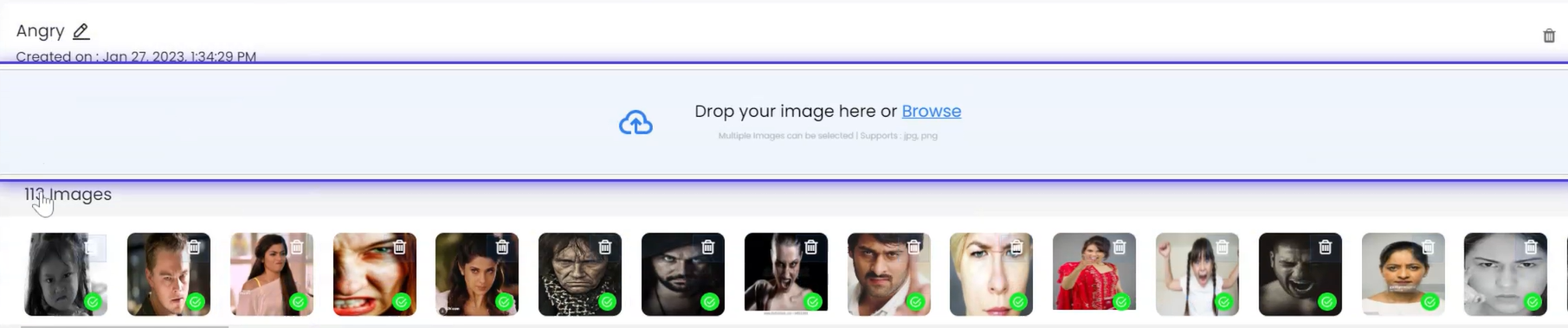

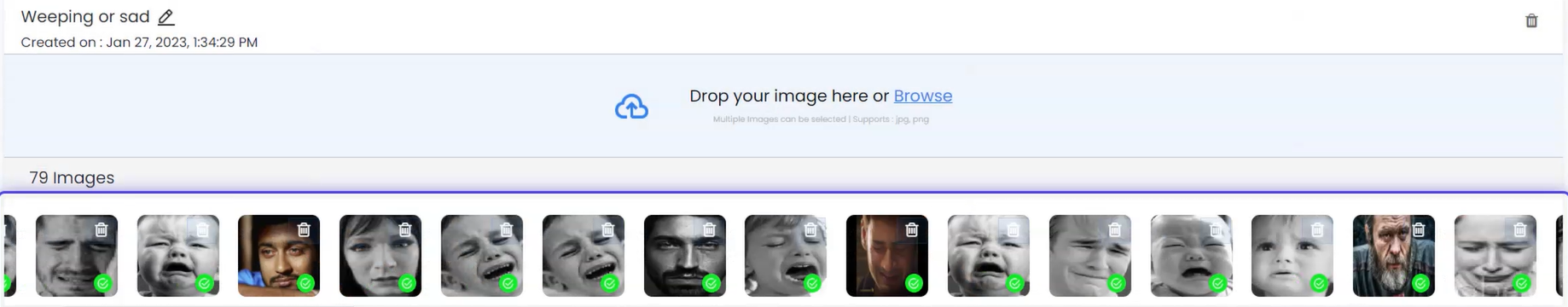

We created an Angry class and a Weeping or Sad class here.

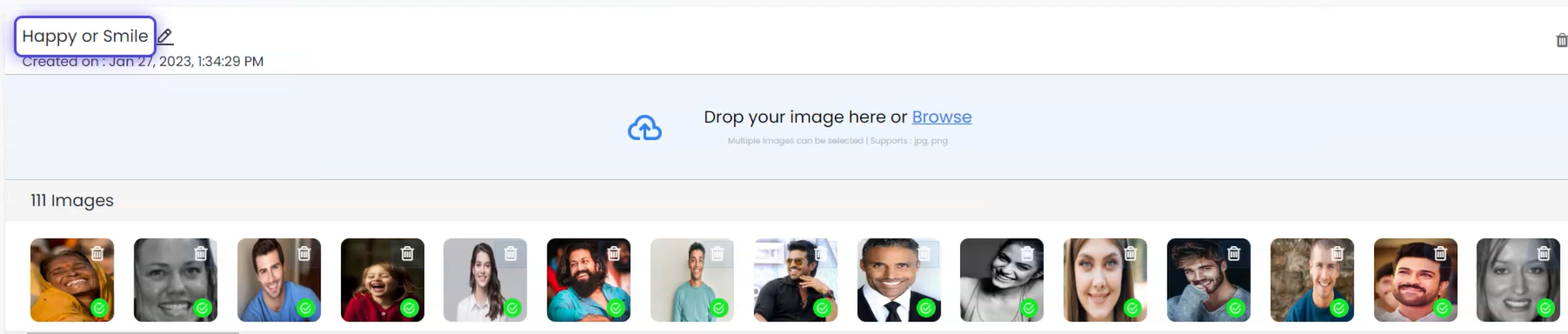

The images are uploaded to Class 1 [Happy or Smile]

The images are uploaded to Class 2 [Angry]

The images are uploaded to Class 3 [Weeping or Sad]
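Before uploading, it can help to check how many images you have for each class, since a heavily unbalanced set tends to bias a classifier toward the larger classes. The sketch below assumes the images sit in one local folder per class; the folder names and the placeholder files are hypothetical and are not something navan.ai requires.

```python
import tempfile
from collections import Counter
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def count_images_per_class(root):
    """Count image files in each immediate subfolder of root (one folder per class)."""
    counts = Counter()
    for class_dir in sorted(Path(root).iterdir()):
        if class_dir.is_dir():
            counts[class_dir.name] = sum(
                1 for f in class_dir.iterdir() if f.suffix.lower() in IMAGE_SUFFIXES
            )
    return counts


# Build a tiny placeholder dataset so the sketch runs on its own.
with tempfile.TemporaryDirectory() as tmp:
    layout = {"happy_or_smile": 3, "angry": 2, "weeping_or_sad": 2}
    for name, n_images in layout.items():
        class_dir = Path(tmp) / name
        class_dir.mkdir()
        for i in range(n_images):
            (class_dir / f"img_{i}.jpg").touch()  # empty stand-in files
    counts = count_images_per_class(tmp)

print(dict(counts))
```

In practice you would point `count_images_per_class` at your own image folder instead of the temporary one.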

4. Next, click the start training button to train the model.

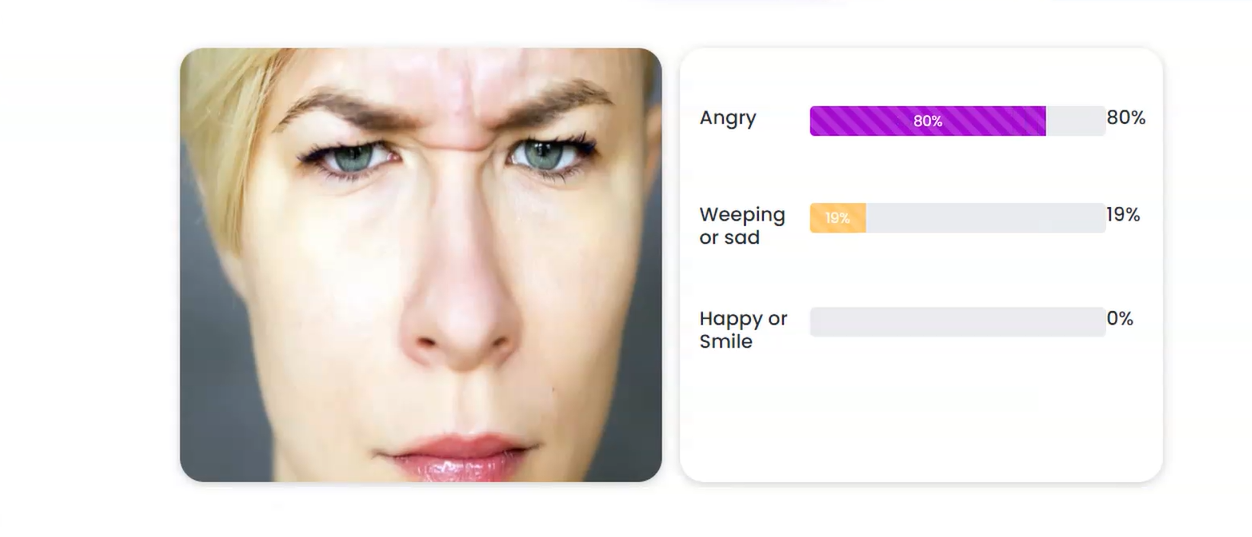

5. Testing the model: Once the HUMAN EMOTIONS 3 image classification model has been trained, the next step is to test the model to see if it is performing according to our expectations. It can be evaluated using a separate test dataset to determine its performance and make any necessary adjustments before deploying it in a real-world application.

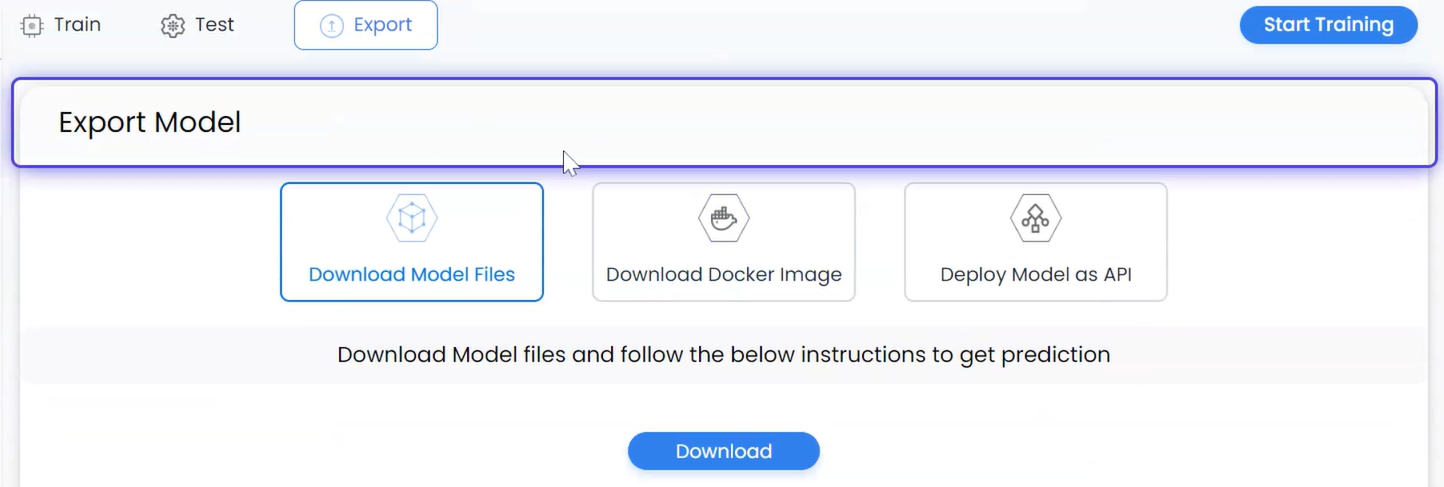
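If you export the model's predictions for a separate test set, the evaluation itself is simple to sketch. The example below computes overall accuracy and per-class recall for the three classes. The labels are made up for illustration and are not results from the HUMAN EMOTIONS 3 model, and the `evaluate` helper is our own hypothetical function.

```python
from collections import Counter

CLASSES = ["Happy or Smile", "Angry", "Weeping or Sad"]

# Hypothetical ground-truth and predicted labels for a held-out test set.
y_true = ["Happy or Smile", "Happy or Smile", "Angry",
          "Angry", "Weeping or Sad", "Weeping or Sad"]
y_pred = ["Happy or Smile", "Happy or Smile", "Angry",
          "Weeping or Sad", "Weeping or Sad", "Weeping or Sad"]


def evaluate(true_labels, predicted_labels, classes):
    """Return (accuracy, per-class recall, confusion counts)."""
    # confusion[(t, p)] = number of samples with true class t predicted as p.
    confusion = Counter(zip(true_labels, predicted_labels))
    correct = sum(confusion[(c, c)] for c in classes)
    accuracy = correct / len(true_labels)

    recall = {}
    for c in classes:
        support = sum(confusion[(c, p)] for p in classes)
        recall[c] = confusion[(c, c)] / support if support else 0.0
    return accuracy, recall, confusion


accuracy, recall, confusion = evaluate(y_true, y_pred, CLASSES)
print(f"accuracy: {accuracy:.2f}")
for name in CLASSES:
    print(f"recall[{name}]: {recall[name]:.2f}")
```

Per-class recall is worth checking alongside accuracy because a model can score well overall while still confusing two classes, such as Angry and Weeping or Sad in the made-up data above.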

6. Deploying the model: The easiest way to deploy the computer vision model is to use the export options listed on navan.ai. There are three options: deploy the model using model files, deploy it through Docker, or deploy it as an API. You can integrate the model with your application to build a scalable use case with your own data, without any coding, on navan.ai.


navan.ai is a no-code computer vision platform that helps developers to build and deploy their computer vision models in minutes. Why invest 2 weeks in building a model from scratch when you can use navan.ai and save 85% of your time and cost in building and deploying a computer vision model? Build your models, share knowledge with the community and help us make computer vision accessible to all. navan.ai also helps organizations to set up CT, CI, and CD pipelines for ML applications.

Visit navan.ai and get started with your computer vision model development NOW!