Introduction:

Koala is a conversation chatbot that was just introduced by UC Berkeley. It is built in the ChatGPT manner, but it is much smaller and performs just as well. According to the research findings, Koala is frequently chosen over Alpaca and shows to be an effective tool for producing answers to a range of user concerns. Furthermore, Koala performs at least as well as ChatGPT in more than half of the instances. The findings demonstrate that when trained on carefully selected data, smaller models can attain virtually equal performance to larger models.

Instead of just growing the size of the current systems, the team is urging the community to concentrate on selecting high-quality datasets to build smaller, safer, and more effective models.

They add that because Koala is still a research prototype and has limits with regard to dependability, safety, and content, it should not be used for commercial purposes.

What is Koala?

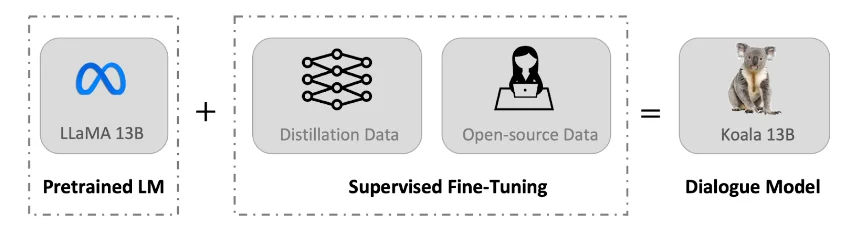

Koala is a new model that was refined using publicly available interaction data that was scraped from the internet. It was especially focused on data that included interaction with very powerful closed-source models like ChatGPT. Fine-tuning the LLaMA base model involves using conversation data collected from public datasets and the web, which includes high-quality user query responses from other big language models, question answering datasets, and human feedback datasets. Based on human evaluation on real-world user prompts, the resulting model, Koala-13B, demonstrates competitive performance when compared to previous models. According to the essay, learning from superior datasets can help smaller models overcome some of their weaknesses and eventually even surpass the power of large, closed-source models.

Koala Architecture:

Koala is a chatbot that Meta's LLaMA was tuned using dialogue data collected from the internet. We also give the findings of a user research that contrasts our model with ChatGPT and Stanford's Alpaca. We further explain the dataset curation and training method of our model. According to our findings, Koala can proficiently address a wide range of user inquiries, producing outcomes that are frequently superior to those of Alpaca and, in more than half of the instances, at least equal to those of ChatGPT.

Specifically, it implies that, when trained on carefully selected data, models small enough to be executed locally can mimic a significant portion of the performance of their larger models. This may mean, for instance, that rather than just growing the scale of current systems, the community should work harder to curate high-quality datasets, as this might enable safer, more realistic, and more competent models. We stress that Koala is currently a research prototype and should not be used for anything other than research. Although we hope that its release will serve as a useful community resource, it still has significant issues with stability, safety, and content.

Koala Overview:

Large language models (LLMs) have made it possible for chatbots and virtual assistants to become more and more sophisticated. Examples of these systems are ChatGPT, Bard, Bing Chat, and Claude, which can all produce poetry and answer to a variety of user inquiries in addition to offering sample code. To train, many of the most powerful LLMs need massive amounts of computer power and frequently make use of proprietary datasets that are vast in size. This implies that in the future, a small number of companies will control a big portion of the highly capable LLMs, and that both users and researchers will have to pay to interact with these models without having direct control over how they are changed and enhanced.

Koala offers yet another piece of evidence in support of this argument. Koala is optimised using publicly accessible interaction data that is scraped from the internet, with a particular emphasis on data involving interactions with extremely powerful closed-source models like ChatGPT. These include question answering and human feedback datasets, as well as high-quality user query responses from other big language models. Human evaluation on real-world user prompts suggests that the resulting model, Koala-13B, performs competitively with previous models.

The findings imply that learning from superior datasets can somewhat offset the drawbacks of smaller models and, in the future, may even be able to match the power of huge, closed-source models. This may mean, for instance, that rather than just growing the scale of current systems, the community should work harder to curate high-quality datasets, as this might enable safer, more realistic, and more competent models.

Datasets and Training:

Sifting through training data is one of the main challenges in developing dialogue models. Well-known chat models such as ChatGPT, Bard, Bing Chat, and Claude rely on proprietary datasets that have been heavily annotated by humans. We collected conversation data from public databases and the web to create our training set, which we then used to build Koala. A portion of this data consists of user-posted online conversations with massive language models (e.g., ChatGPT).

Instead concentrating on gathering a large amount of web data through scraping, we concentrate on gathering a small but high-quality dataset. For question responding, we leverage public datasets, human input (positive and negative ratings on responses), and conversations with language models that already exist. Below, we offer the specifics of the dataset composition.

Limitations and Challenges

Koala has limitations, just like other language models, and when used improperly, it can be dangerous. We note that, probably as a consequence of the dialogue fine-tuning, Koala can experience hallucinations and produce erroneous responses in a very confident tone. This may have the regrettable consequence of implying that smaller models acquire the bigger language models' assured style before they acquire the same degree of factuality; if this is the case, this is a constraint that needs to be investigated in more detail in subsequent research. When utilised improperly, Koala's hallucinogenic responses may aid in the dissemination of false information, spam, and other materials.

1. Traits and Prejudices:

Due to the biases present in the discourse data used for training, our model may contribute to negative preconceptions, discrimination, and other negative outcomes.

2. Absence of Common Sense:

Although large language models are capable of producing seemingly intelligible and grammatically correct text, they frequently lack common sense information that we take for granted as humans. This may result in improper or absurd responses.

3. Restricted Knowledge:

Large language models may find it difficult to comprehend the subtleties and context of a conversation. Additionally, they might not be able to recognize irony or sarcasm, which could result in miscommunication.

Future Projects with Koala

It is our aim that the Koala model will prove to be a valuable platform for further academic study on large language models. It is small enough to be used with modest compute power, yet capable enough to demonstrate many of the features we associate with contemporary LLMs. Some potentially fruitful directions to consider are:

1. Alignment and safety:

Koala enables improved alignment with human intents and additional research on language model safety.

2. Bias in models:

We can now comprehend large language model biases, misleading correlations, quality problems in dialogue datasets, and strategies to reduce these biases thanks to Koala.

3. Comprehending extensive language models:

Koala inference makes (formerly black-box) language models more interpretable by allowing us to better examine and comprehend the internal workings of conversational language models on comparatively cheap commodity GPUs.

Conclusion:

Small language models can be trained faster and with less computational power than bigger models, as demonstrated by Koala's findings. For academics and developers who might not have access to high-performance computing resources, this makes them more accessible.

navan.ai has a no-code platform - nstudio.navan.ai where users can build computer vision models within minutes without any coding. Developers can sign up for free on nstudio.navan.ai

Want to add Vision AI machine vision to your business? Reach us on https://navan.ai/contact-us for a free consultation.