Introduction

Image processing is the branch of computer science and engineering concerned with the analysis and manipulation of digital images. It uses algorithms to enhance or transform images, or to extract information from them. Image processing has applications in many fields, including entertainment, remote sensing, medicine, and surveillance.

Common tasks and techniques in image processing:

1. Image Enhancement: Improving an image's appearance or quality with techniques such as colour correction, noise reduction, contrast enhancement, and sharpening.

2. Image Restoration: Recovering images that have been degraded by noise, blurring, or other causes, using methods such as denoising and deblurring.

3. Feature Extraction: Identifying and extracting useful features from images, which can then be used for classification, object detection, and pattern recognition.

4. Object Detection and Recognition: Locating objects of interest in an image and determining their class or category. Techniques include template matching, object tracking, and machine learning approaches such as convolutional neural networks (CNNs).

5. Image Compression: Reducing an image's file size, to save storage space or bandwidth, while minimising the loss of quality. Common compressed image formats include JPEG, which uses lossy compression, and PNG and GIF, which use lossless compression.

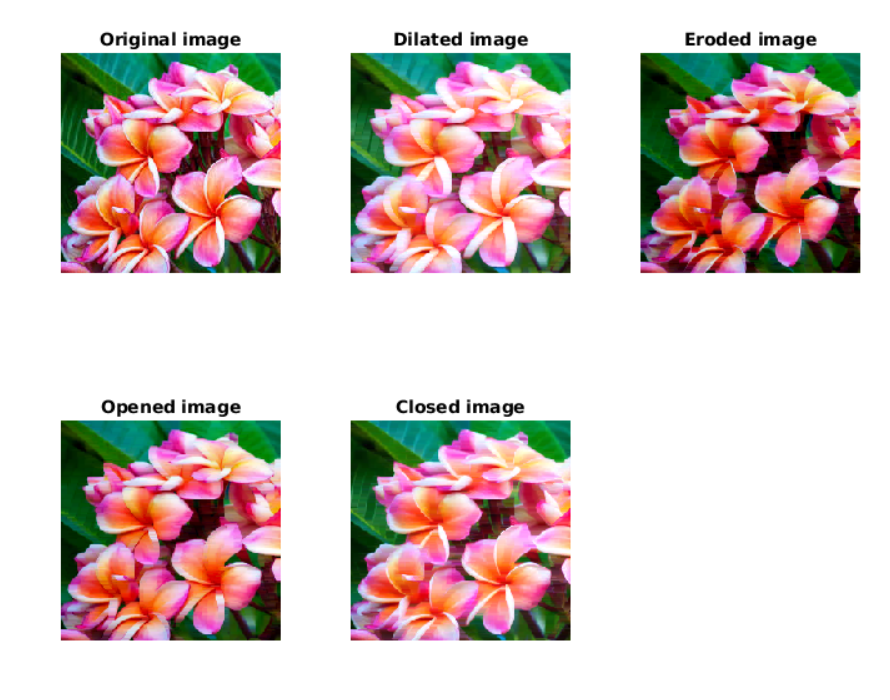

6. Morphological Processing: Operations such as dilation, erosion, opening, and closing that act on the shape and structure of objects within an image.

7. Geometric Transformation: Altering an image's spatial properties through rotation, scaling, translation, warping, and similar operations. Geometric transformations are useful for tasks such as geometric rectification and image registration.

8. Image Registration: Aligning several images of the same scene or object so that they can be compared or fused. This benefits applications in computer vision, remote sensing, and medical imaging.

9. Image Analysis and Pattern Recognition: Analysing images to find patterns and extract meaningful information, for example scene interpretation, object counting, and texture analysis.

10. Image Segmentation: Partitioning an image into meaningful regions. A simple approach divides an image into foreground and background by comparing each pixel with a specified threshold value; another groups pixels into clusters based on similarity in colour, intensity, or texture, using algorithms such as k-means or mean shift clustering.
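Both segmentation approaches can be sketched with NumPy alone. This is a minimal illustration, assuming a single-channel (grayscale) image stored as a 2-D array; `threshold_segment` and `kmeans_segment` are hypothetical helper names, and the k-means here clusters intensity only, not colour or texture.

```python
import numpy as np

def threshold_segment(image, threshold):
    """Foreground mask: True where a pixel is brighter than `threshold`."""
    return image > threshold

def kmeans_segment(image, k=2, iterations=20, seed=0):
    """Cluster pixel intensities into k groups with plain k-means.

    Needs at least k distinct intensities. Returns an integer label per
    pixel, with the same shape as `image`.
    """
    pixels = image.reshape(-1, 1).astype(float)
    rng = np.random.default_rng(seed)
    # Start from k distinct intensity values chosen at random.
    centres = rng.choice(np.unique(pixels), size=k, replace=False).reshape(k, 1)
    for _ in range(iterations):
        # Assignment step: nearest centre for every pixel.
        labels = np.abs(pixels - centres.T).argmin(axis=1)
        # Update step: move each centre to the mean of its pixels.
        for j in range(k):
            members = pixels[labels == j]
            if members.size:
                centres[j] = members.mean()
    labels = np.abs(pixels - centres.T).argmin(axis=1)
    return labels.reshape(image.shape)

# Synthetic 6x6 image: dark background with a bright 2x2 square.
image = np.full((6, 6), 10, dtype=np.uint8)
image[2:4, 2:4] = 200
mask = threshold_segment(image, threshold=128)
labels = kmeans_segment(image, k=2)
```

In practice the threshold is often chosen automatically (for example with Otsu's method), and library implementations such as scikit-learn's `KMeans` add better initialisation and convergence checks.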

Classic image processing algorithms:

1. Morphological Image Processing

Morphological image processing is a technique used in digital image processing and computer vision to analyse and manipulate the shape and structure of objects within an image. It is based on mathematical morphology, the study of shapes, structures, and patterns.

The fundamental operations in morphological image processing are dilation and erosion, which involve modifying the shape of objects in an image based on the shape of a structuring element (also known as a kernel or mask).

Dilation: Dilation expands the boundaries of objects in an image. It is performed by sliding the structuring element over the image and setting each output pixel, at the position of the element's centre, to the maximum value found in the neighbourhood covered by the element.

Erosion: Erosion shrinks the boundaries of objects in an image. It is performed by sliding the structuring element over the image and setting each output pixel, at the position of the element's centre, to the minimum value found in the neighbourhood covered by the element.
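Dilation and erosion as described above can be sketched in NumPy by stacking shifted copies of the image and taking the per-pixel maximum or minimum. This sketch assumes a 2-D grayscale or binary array and an odd-sized structuring element whose centre is the origin; the helper names are hypothetical. For a symmetric element such as the 3x3 square used below, this matches the standard definitions, and opening and closing follow by composing the two operations.

```python
import numpy as np

def _shifted_views(image, structuring_element, pad_value):
    """Stack the pixel values under each 'on' cell of the structuring element."""
    se = np.asarray(structuring_element, dtype=bool)
    kh, kw = se.shape
    ph, pw = kh // 2, kw // 2
    # Pad with the image's own min (for max) or max (for min) so padded
    # cells cannot change the result.
    padded = np.pad(image, ((ph, ph), (pw, pw)), mode="constant",
                    constant_values=pad_value)
    h, w = image.shape
    return np.stack([padded[dy:dy + h, dx:dx + w]
                     for dy in range(kh) for dx in range(kw) if se[dy, dx]])

def dilate(image, structuring_element):
    """Each output pixel becomes the maximum of its neighbourhood."""
    return _shifted_views(image, structuring_element, image.min()).max(axis=0)

def erode(image, structuring_element):
    """Each output pixel becomes the minimum of its neighbourhood."""
    return _shifted_views(image, structuring_element, image.max()).min(axis=0)

def opening(image, se):
    """Erosion then dilation: removes small bright specks."""
    return dilate(erode(image, se), se)

def closing(image, se):
    """Dilation then erosion: fills small dark holes."""
    return erode(dilate(image, se), se)

square = np.ones((3, 3), dtype=bool)
image = np.zeros((7, 7), dtype=np.uint8)
image[3, 3] = 1                      # a single bright pixel
grown = dilate(image, square)        # becomes a 3x3 block of ones
restored = erode(grown, square)      # shrinks back to the single pixel
```

Libraries provide optimised versions, for example `cv2.dilate` and `cv2.erode` in OpenCV, and `skimage.morphology.dilation` and `skimage.morphology.erosion` in scikit-image.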

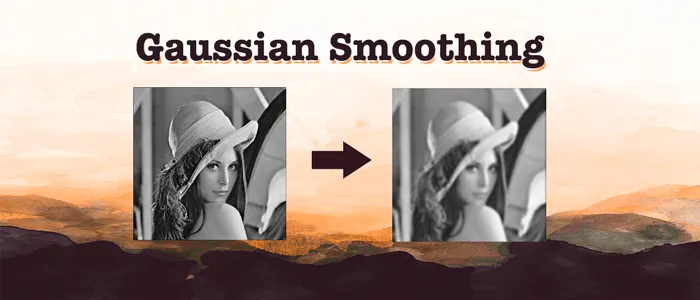

2. Gaussian Image Processing

Gaussian filtering applies a Gaussian filter (kernel) to an image. The Gaussian filter is a linear filter commonly used to smooth or blur an image. It is named after the Gaussian distribution (also known as the normal distribution), which has a characteristic bell-shaped curve. Each output pixel is a weighted average of the intensities of its neighbours, with weights that fall off with distance from the centre of the kernel. This makes the filter effective at reducing noise, although, like any smoothing filter, it also softens edges and fine detail.

The Gaussian filter is defined by two parameters:

Standard Deviation (σ): This parameter determines the spread or width of the Gaussian distribution. A larger standard deviation results in a wider and smoother blur, while a smaller standard deviation produces a narrower blur.

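Size of the Kernel: This refers to the dimensions of the Gaussian kernel matrix, which determine the extent of the neighbourhood used for smoothing. The kernel should be large enough to cover most of the Gaussian's weight for the chosen σ; a common rule of thumb is a width of about 6σ, rounded up to an odd number. A larger σ, with a correspondingly larger kernel, gives a stronger blur but also removes more fine detail.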
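The roles of σ and the kernel size can be seen in a small NumPy sketch. `gaussian_kernel` and `gaussian_blur` are hypothetical helper names, the default size of about 6σ is an assumed rule of thumb, and mirroring the image at its borders is one of several possible boundary choices.

```python
import numpy as np

def gaussian_kernel(sigma, size=None):
    """Normalised 2-D Gaussian kernel.

    Default size is about 6*sigma; any even size is rounded up to odd.
    """
    if size is None:
        size = int(np.ceil(6 * sigma)) | 1     # bitwise OR with 1 makes it odd
    radius = size // 2
    x = np.arange(-radius, radius + 1)
    g = np.exp(-(x ** 2) / (2 * sigma ** 2))   # 1-D Gaussian profile
    kernel = np.outer(g, g)                    # separable: 2-D = outer product
    return kernel / kernel.sum()               # weights sum to 1

def gaussian_blur(image, sigma, size=None):
    """Replace each pixel by a Gaussian-weighted average of its neighbourhood."""
    kernel = gaussian_kernel(sigma, size)
    r = kernel.shape[0] // 2
    padded = np.pad(image.astype(float), r, mode="reflect")  # mirror the borders
    h, w = image.shape
    out = np.zeros((h, w))
    for dy in range(kernel.shape[0]):
        for dx in range(kernel.shape[1]):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out

rng = np.random.default_rng(0)
noisy = rng.normal(0.0, 1.0, (64, 64))
smooth = gaussian_blur(noisy, sigma=2.0)   # 13x13 kernel, noise strongly reduced
```

Because the 2-D kernel is the outer product of two 1-D Gaussians, optimised implementations such as `scipy.ndimage.gaussian_filter` apply two 1-D passes instead, which is much cheaper for large kernels.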

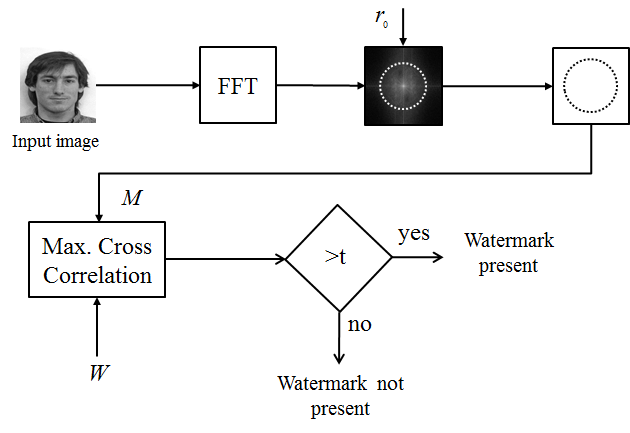

3. Fourier Transform in image processing

The Fourier transform is a mathematical tool used in image processing to analyse the frequency content of images. It decomposes an image into its constituent spatial frequencies, revealing information about patterns, textures, edges, and other structures present in the image.

There are two main types of Fourier Transforms used in image processing:

Continuous Fourier Transform (CFT): In the context of image processing, continuous Fourier Transform is rarely used directly because digital images are discrete in nature. However, it forms the theoretical basis for the Discrete Fourier Transform (DFT).

Discrete Fourier Transform (DFT): DFT is the primary form of Fourier Transform used in image processing. It operates on digital images represented as arrays of discrete pixel values. DFT converts spatial domain information (pixel intensity values) into frequency domain information (magnitude and phase of spatial frequencies).
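NumPy's `numpy.fft` module computes the DFT using the fast Fourier transform (FFT) algorithm. The sketch below, which assumes a 2-D grayscale array, obtains the magnitude and phase of an image's spatial frequencies and then applies a simple frequency-domain filter; `ideal_lowpass` is a hypothetical helper that uses a hard circular cutoff chosen for brevity.

```python
import numpy as np

def ideal_lowpass(image, cutoff):
    """Zero every spatial frequency farther than `cutoff` from zero frequency."""
    spectrum = np.fft.fftshift(np.fft.fft2(image))   # move zero frequency to the centre
    h, w = image.shape
    yy, xx = np.ogrid[:h, :w]
    distance = np.hypot(yy - h // 2, xx - w // 2)    # distance from the centre
    spectrum[distance > cutoff] = 0                  # discard high frequencies
    return np.fft.ifft2(np.fft.ifftshift(spectrum)).real

# A 32x32 test image with a vertical step edge.
image = np.zeros((32, 32))
image[:, 16:] = 1.0

F = np.fft.fft2(image)          # spatial domain -> frequency domain
magnitude = np.abs(F)           # strength of each spatial frequency
phase = np.angle(F)             # phase (position information) of each frequency
smoothed = ideal_lowpass(image, cutoff=4)   # blurs the edge
```

A hard cutoff introduces ringing artefacts near edges; smoother masks, such as a Gaussian in the frequency domain, are usually preferred in practice.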

4. Edge Detection in image processing

Edge detection is a fundamental technique in image processing used to identify and locate boundaries within an image. Edges are significant changes in pixel intensity and often correspond to object boundaries, surface discontinuities, or other meaningful features in the image. Detecting edges is crucial for tasks such as object recognition, image segmentation, and feature extraction.

Gradient-based methods:

Sobel Operator: The Sobel operator is a popular edge detection filter. It convolves the image with a pair of 3x3 kernels to approximate the horizontal and vertical derivatives of intensity, from which the gradient magnitude is computed.

Prewitt Operator: Similar to the Sobel operator, the Prewitt operator calculates image gradients using a pair of 3x3 convolution kernels, but it weights all rows (or columns) equally instead of giving extra weight to the centre.

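Scharr Operator: An alternative to the Sobel and Prewitt operators, the Scharr operator uses 3x3 kernels with weights chosen to give a more rotationally symmetric (isotropic) response, so that edges at diagonal orientations are measured more accurately.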

Gradient Magnitude and Direction: Edge pixels can be detected by thresholding the magnitude of the gradient or by considering both gradient magnitude and direction.
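The gradient-based pipeline (filter, then threshold the magnitude) can be sketched in NumPy as follows, assuming a 2-D grayscale array. The kernel is applied by correlation rather than flipped convolution, which only changes the sign of `gx` and `gy`, not the magnitude; the helper names and the threshold value are illustrative assumptions.

```python
import numpy as np

SOBEL_X = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=float)   # horizontal derivative
SOBEL_Y = SOBEL_X.T                             # vertical derivative

def filter3x3(image, kernel):
    """Slide a 3x3 kernel over the image (correlation, edge-replicated borders)."""
    padded = np.pad(image.astype(float), 1, mode="edge")
    h, w = image.shape
    out = np.zeros((h, w))
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out

def sobel_edges(image, threshold):
    """Return an edge mask plus the gradient magnitude and direction (radians)."""
    gx = filter3x3(image, SOBEL_X)
    gy = filter3x3(image, SOBEL_Y)
    magnitude = np.hypot(gx, gy)
    direction = np.arctan2(gy, gx)
    return magnitude > threshold, magnitude, direction

image = np.zeros((8, 8))
image[:, 4:] = 1.0                   # vertical step edge between columns 3 and 4
edges, magnitude, direction = sobel_edges(image, threshold=2.0)
```

Swapping in the Prewitt kernel `[[-1, 0, 1]] * 3` or the Scharr kernel `[[-3, 0, 3], [-10, 0, 10], [-3, 0, 3]]` (as NumPy arrays) gives the other two operators, although their response scales differ, so the threshold must be adjusted. OpenCV also provides `cv2.Sobel` and `cv2.Scharr` directly.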

Laplacian of Gaussian (LoG): The LoG operator smooths the image with a Gaussian kernel and then applies the Laplacian operator, a second-derivative filter. Smoothing first makes the result much less sensitive to noise than the Laplacian alone, so edges are enhanced without amplifying noise as strongly.

Canny Edge Detector: The Canny edge detector is a multi-stage algorithm designed to produce thin, well-localised edges while suppressing noise. Its stages are:

Gaussian Blur: Smoothing the image with a Gaussian filter to reduce noise and remove small details.

Gradient Calculation: Computing the gradient magnitude and direction.

Non-maximum Suppression: Keeping only pixels whose gradient magnitude is a local maximum along the gradient direction, which thins edges to about one pixel wide.

Double Thresholding: Applying a high and a low threshold to classify pixels as strong edges, weak edges, or non-edges.

Edge Tracking by Hysteresis: Keeping weak edges only where they connect to strong edges, which forms continuous edge contours and discards isolated weak responses.
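The last two Canny stages are the least obvious, so here is a minimal NumPy sketch of them alone. It assumes the gradient magnitude has already been computed and thinned by the earlier stages; `hysteresis_threshold` is a hypothetical name and 8-connectivity is an assumed choice. In practice, `cv2.Canny` runs the whole pipeline, and scikit-image offers `skimage.filters.apply_hysteresis_threshold` for this step.

```python
from collections import deque

import numpy as np

def hysteresis_threshold(magnitude, low, high):
    """Double thresholding followed by edge tracking by hysteresis.

    Pixels >= high are strong edges. Pixels in [low, high) are weak edges,
    kept only if 8-connected (possibly through other weak pixels) to a
    strong edge.
    """
    strong = magnitude >= high
    weak = (magnitude >= low) & ~strong
    edges = strong.copy()
    h, w = magnitude.shape
    # Breadth-first search outward from every strong pixel.
    queue = deque(zip(*np.nonzero(strong)))
    while queue:
        y, x = queue.popleft()
        for dy in (-1, 0, 1):
            for dx in (-1, 0, 1):
                ny, nx = y + dy, x + dx
                if 0 <= ny < h and 0 <= nx < w and weak[ny, nx] and not edges[ny, nx]:
                    edges[ny, nx] = True      # weak pixel joins the edge
                    queue.append((ny, nx))
    return edges

magnitude = np.array([
    [0, 0, 0, 0, 0, 0],
    [9, 5, 5, 0, 5, 0],   # a strong pixel, a connected weak run, an isolated weak pixel
    [0, 0, 0, 0, 0, 0],
], dtype=float)
edges = hysteresis_threshold(magnitude, low=4, high=8)
```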

Zero Crossing Edge Detection: This method finds zero crossings in the second derivative of the image, which mark the points where the intensity gradient peaks. It is usually implemented by applying the Laplacian, often in the form of the LoG, and marking pixels where the response changes sign between neighbouring pixels.
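LoG filtering and zero-crossing detection combine naturally: filter the image with a LoG kernel, then mark pixels where the response changes sign. The NumPy sketch below assumes a grayscale array; `log_kernel`, `filter2d`, and `zero_crossings` are hypothetical helpers. The kernel omits the usual normalisation constant, which scales the response but does not move the zero crossings, and `eps` and `min_slope` are assumed tuning parameters: the first stops floating-point residue in flat regions from creating spurious crossings, the second can suppress weak ones.

```python
import numpy as np

def log_kernel(sigma, size=None):
    """Laplacian-of-Gaussian kernel (up to a positive scale), mean-subtracted."""
    if size is None:
        size = int(np.ceil(6 * sigma)) | 1       # about 6*sigma, forced odd
    r = size // 2
    y, x = np.mgrid[-r:r + 1, -r:r + 1].astype(float)
    q = (x ** 2 + y ** 2) / (2 * sigma ** 2)
    kernel = (q - 1) * np.exp(-q)                 # shape of the Laplacian of a Gaussian
    return kernel - kernel.mean()                 # sums to zero: flat regions give ~0

def filter2d(image, kernel):
    """Slide a square kernel over the image (edge-replicated borders)."""
    r = kernel.shape[0] // 2
    padded = np.pad(image.astype(float), r, mode="edge")
    h, w = image.shape
    out = np.zeros((h, w))
    for dy in range(kernel.shape[0]):
        for dx in range(kernel.shape[1]):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out

def zero_crossings(response, min_slope=0.0, eps=1e-9):
    """Mark pixels whose response has the opposite sign to the right or lower neighbour."""
    r = np.where(np.abs(response) > eps, response, 0.0)   # treat float residue as zero
    edges = np.zeros(r.shape, dtype=bool)
    edges[:, :-1] |= (r[:, :-1] * r[:, 1:] < 0) & (np.abs(r[:, :-1] - r[:, 1:]) > min_slope)
    edges[:-1, :] |= (r[:-1, :] * r[1:, :] < 0) & (np.abs(r[:-1, :] - r[1:, :]) > min_slope)
    return edges

image = np.zeros((16, 16))
image[:, 8:] = 1.0                      # vertical step edge
response = filter2d(image, log_kernel(sigma=1.5))
edges = zero_crossings(response)        # marks the column just left of the step
```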

Morphological Edge Detection: Morphological operations such as dilation and erosion can also reveal edges. For example, the morphological gradient, the difference between the dilation and the erosion of an image, highlights object boundaries.
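One concrete morphological edge detector is the morphological gradient: dilate the image, erode it, and subtract, so only pixels near a boundary (where the local maximum and minimum differ) survive. The sketch below assumes a 2-D array, an odd-sized square structuring element, and edge-replicated borders; `morphological_gradient` is a hypothetical helper name.

```python
import numpy as np

def morphological_gradient(image, size=3):
    """Dilation minus erosion with an odd size x size square structuring element."""
    r = size // 2
    padded = np.pad(image, r, mode="edge")        # replicate borders
    h, w = image.shape
    windows = np.stack([padded[dy:dy + h, dx:dx + w]
                        for dy in range(size) for dx in range(size)])
    dilated = windows.max(axis=0)                 # local maximum
    eroded = windows.min(axis=0)                  # local minimum
    # Never negative, since each local max is at least the local min.
    return dilated - eroded

image = np.zeros((7, 7), dtype=np.uint8)
image[2:5, 2:5] = 255                             # bright 3x3 square
gradient = morphological_gradient(image)          # non-zero only around the square's boundary
```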

5. Wavelet Image Processing

Wavelet image processing refers to a set of techniques that utilise wavelet transforms to analyse and manipulate digital images. Wavelets are mathematical functions that are localised in both frequency and space domains, making them well-suited for representing and processing signals with localised features, such as images.

There are several types of wavelet transforms used in image processing, including:

Continuous Wavelet Transform (CWT): The CWT decomposes an image into wavelet coefficients at continuously varying scales and positions. It provides high flexibility but can be computationally expensive.

Discrete Wavelet Transform (DWT): The DWT decomposes an image into wavelet coefficients at discrete scales and positions. It is computationally more efficient than the CWT and is widely used in practical applications.
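The simplest DWT uses the Haar wavelet, which replaces pairs of pixels by scaled sums (a coarse approximation) and differences (details). The sketch below implements one level in NumPy, assuming a grayscale array with even dimensions; the function names are hypothetical, and naming conventions for the three detail bands vary between sources. The PyWavelets package (`pywt.dwt2(image, 'haar')`) provides this and many other wavelets.

```python
import numpy as np

SQRT2 = np.sqrt(2.0)

def haar_dwt2(image):
    """One level of the orthonormal 2-D Haar DWT.

    Returns the approximation band and a tuple of three detail bands,
    each half the input size in both directions.
    """
    x = np.asarray(image, dtype=float)
    if x.shape[0] % 2 or x.shape[1] % 2:
        raise ValueError("this sketch needs even image dimensions")
    # Along each row: pairwise sums (low-pass) and differences (high-pass).
    lo = (x[:, 0::2] + x[:, 1::2]) / SQRT2
    hi = (x[:, 0::2] - x[:, 1::2]) / SQRT2
    # Along each column of both results.
    approx = (lo[0::2] + lo[1::2]) / SQRT2
    detail_a = (lo[0::2] - lo[1::2]) / SQRT2     # responds to horizontal edges
    detail_b = (hi[0::2] + hi[1::2]) / SQRT2     # responds to vertical edges
    detail_c = (hi[0::2] - hi[1::2]) / SQRT2     # responds to diagonal detail
    return approx, (detail_a, detail_b, detail_c)

def haar_idwt2(approx, details):
    """Exactly invert haar_dwt2."""
    detail_a, detail_b, detail_c = details
    h, w = approx.shape
    lo = np.empty((2 * h, w))
    hi = np.empty((2 * h, w))
    lo[0::2] = (approx + detail_a) / SQRT2
    lo[1::2] = (approx - detail_a) / SQRT2
    hi[0::2] = (detail_b + detail_c) / SQRT2
    hi[1::2] = (detail_b - detail_c) / SQRT2
    x = np.empty((2 * h, 2 * w))
    x[:, 0::2] = (lo + hi) / SQRT2
    x[:, 1::2] = (lo - hi) / SQRT2
    return x

rng = np.random.default_rng(0)
image = rng.random((8, 8))
approx, details = haar_dwt2(image)        # approx is a 4x4 half-resolution image
restored = haar_idwt2(approx, details)    # matches image up to rounding
```

Repeating the transform on the approximation band gives a multi-level decomposition; applications such as compression and denoising work by discarding or shrinking small detail coefficients before inverting.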

6. Image processing using Neural Networks

Image processing using neural networks involves leveraging deep learning models to perform various tasks such as image classification, object detection, segmentation, enhancement, and generation. Convolutional Neural Networks (CNNs) are the most commonly used type of neural network architecture for image processing tasks due to their ability to effectively capture spatial hierarchies and learn meaningful representations directly from raw pixel data.
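At their core, CNN layers slide small filters over the image, much like the fixed edge-detection kernels described earlier, then apply a nonlinearity and often pooling; the difference is that the filter weights are learned from data during training. The NumPy sketch below shows the forward pass of one such stage with a hand-set filter (an assumption for illustration). The function names are hypothetical, and real networks are built with frameworks such as PyTorch or TensorFlow, which also handle multiple channels, batching, and training.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Cross-correlate without padding, as CNN convolution layers do."""
    kh, kw = kernel.shape
    h, w = image.shape
    out = np.zeros((h - kh + 1, w - kw + 1))
    for dy in range(kh):
        for dx in range(kw):
            out += kernel[dy, dx] * image[dy:dy + out.shape[0], dx:dx + out.shape[1]]
    return out

def relu(x):
    """Nonlinearity: keep positive responses, zero the rest."""
    return np.maximum(x, 0.0)

def max_pool2x2(x):
    """2x2 max pooling with stride 2 (a trailing odd row/column is dropped)."""
    h, w = x.shape[0] // 2 * 2, x.shape[1] // 2 * 2
    x = x[:h, :w]
    return x.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))

def conv_block(image, kernel, bias=0.0):
    """One CNN stage: convolution, ReLU, then max pooling."""
    return max_pool2x2(relu(conv2d_valid(image, kernel) + bias))

image = np.zeros((6, 6))
image[:, 3:] = 1.0                            # vertical edge
kernel = np.array([[-1.0, 0.0, 1.0]] * 3)     # hand-set vertical-edge detector
features = conv_block(image, kernel)          # 2x2 feature map
```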

Image processing tools

1. OpenCV

OpenCV (Open Source Computer Vision Library) is an open-source computer vision and machine learning software library. It provides a comprehensive set of tools and functions for various image processing and computer vision tasks, making it a popular choice for researchers, developers, and practitioners working in fields such as robotics, augmented reality, facial recognition, and autonomous vehicles.

2. Scikit-image

Scikit-image is an open-source Python library for image processing. It provides a comprehensive collection of algorithms and functions for image manipulation, analysis, and computer vision. scikit-image is built on NumPy and SciPy and represents images as NumPy arrays, so it integrates easily with other libraries in the scientific Python ecosystem, such as matplotlib.

3. PIL/pillow

PIL (Python Imaging Library) is a library for opening, manipulating, and saving many different image file formats. It was originally created by Fredrik Lundh and later maintained by the Python Imaging Library (PIL) community. However, development of PIL ceased around 2011, and the library became largely dormant.

Pillow is a modern fork of PIL that is actively developed and maintained. It was created to continue the development of the original PIL library and to provide support for Python 3.x versions, as well as to add new features and improvements. Pillow is designed to be compatible with PIL, making it a drop-in replacement for most applications.

4. NumPy

NumPy is a powerful Python library used for numerical computing. It provides support for large, multi-dimensional arrays and matrices, along with a collection of mathematical functions to operate on these arrays efficiently. NumPy is a fundamental package for scientific computing with Python and is widely used in fields such as machine learning, data analysis, and scientific research.
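Because OpenCV's Python bindings, scikit-image, and Mahotas all represent images as NumPy arrays, many everyday operations reduce to array indexing and arithmetic. The sketch uses a small synthetic RGB image (an assumption; a real one would be loaded from disk), and the 0.299/0.587/0.114 weights are the ITU-R BT.601 luma coefficients, one common choice for grayscale conversion.

```python
import numpy as np

# A synthetic 4x6 RGB image: height x width x channels, 8-bit values.
image = np.zeros((4, 6, 3), dtype=np.uint8)
image[:, 3:] = (255, 128, 0)          # right half orange

height, width, channels = image.shape
cropped = image[1:3, 2:5]             # basic slicing crops without copying (a view)
flipped = image[:, ::-1]              # horizontal mirror
# Grayscale via BT.601 luma weights, computed in float to avoid overflow.
gray = image.astype(float) @ np.array([0.299, 0.587, 0.114])
# Brighten by 50 while clipping to the valid 0-255 range.
brighter = np.clip(image.astype(int) + 50, 0, 255).astype(np.uint8)
```

Note that OpenCV's `cv2.imread` returns channels in BGR order, so the luma weights would need reversing for arrays loaded that way.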

5. Mahotas

Mahotas is a computer vision and image processing library for Python, designed to work with NumPy arrays. It provides a wide range of algorithms for tasks such as image segmentation, feature extraction, object detection, texture analysis, and more. Mahotas is particularly useful for analysing and processing images in scientific research, computer vision applications, and machine learning projects.

Conclusion:

So we saw how classical image processing can be done using morphological filtering, Gaussian filtering, the Fourier transform, edge detection, and the wavelet transform. All of these can be implemented with image processing libraries such as OpenCV, Mahotas, Pillow (PIL), and scikit-image. navan.ai has a no-code platform, nstudio.navan.ai, where users can build computer vision models within minutes without any coding. Developers can sign up for free on nstudio.navan.ai.

Want to add vision AI to your business? Reach us at https://navan.ai/contact-us for a free consultation.