Introduction:

In 2014, Ian Goodfellow and colleagues introduced Generative Adversarial Networks, or GANs. In essence, a GAN is a generative modelling technique that learns from training data and creates new data resembling it. A GAN is built from two neural networks that compete with one another to capture, replicate, and analyse the variations in a dataset.

The name GAN can be divided into three parts:

Generative: This refers to a generative model, which describes how data is produced in terms of a probabilistic model. Put simply, it explains how data is generated.

Adversarial: The model is trained in an adversarial setting.

Networks: Deep neural networks are used for training.

When given random input, which is usually noise, the generator network creates samples (such as text, music, or images) that closely resemble the data it was trained on. The generator's aim is to produce samples that are indistinguishable from real data.

In contrast, the discriminator network attempts to distinguish generated samples from real ones. It is trained on real samples from the training set and on generated samples from the generator. The goal of the discriminator is to correctly classify generated data as fake and real data as real.

The discriminator and generator play an adversarial game during training. The discriminator seeks to improve its ability to tell real data from generated data, while the generator attempts to produce samples that deceive it. This adversarial training gradually forces both networks to improve.

The generator becomes better at creating realistic samples as training goes on, while the discriminator gets better at telling genuine data from produced data. This approach should ideally converge to a point where the generator can produce high-quality samples that are challenging for the discriminator to discern from actual data.

GANs have shown impressive results in a number of fields, including text generation, image synthesis, and even video generation. They have been applied to deepfakes, realistic image generation, low-resolution image enhancement, and more. The field of generative modelling has benefited immensely from the introduction of GANs, which have also opened new avenues for innovative artificial intelligence applications.

Why Were GANs Designed?

Machine learning algorithms and neural networks can easily be tricked into misclassifying objects by adding a small amount of noise to the data. The more noise is added, the more likely the images are to be misclassified. This raised the question of whether something could be built so that neural networks learn to visualise new patterns that resemble the training data. GANs were developed for this purpose: to produce new, fake results that resemble the original.

How Does a Generative Adversarial Network Work?

The generator and the discriminator are the two main parts of a GAN. The generator's job is to create fake samples modelled on the original samples, much like a thief, and to trick the discriminator into believing the fakes are real. The discriminator, on the other hand, works like a police officer: its job is to spot anomalies in the samples the generator creates and to classify each sample as real or fake. The two components compete against one another until they reach a point at which the generator defeats the discriminator with fake data.

Discriminator

The discriminator is a supervised approach: a basic classifier that predicts whether data is real or fake. It is trained on real data and gives feedback to the generator.

Generator

The generator is an unsupervised learning approach. It produces fake data based on the original (real) data. It is also a neural network, with hidden layers, activation functions, and a loss function. Its goal is to use the discriminator's feedback to create fake images that the discriminator cannot recognise as fake. Training ends when the generator fools the discriminator, at which point we can say that a generalised GAN model has been developed.
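To make the two roles concrete, here is a minimal sketch in plain Python. It assumes one-dimensional data, a one-layer generator, and a logistic discriminator; the function names and parameter values are hypothetical choices for illustration, not part of any particular GAN library.

```python
import math
import random


def generator(z, weight=2.0, bias=5.0):
    """Map a noise value z to a fake sample (hypothetical one-layer generator)."""
    return weight * z + bias


def discriminator(x, weight=1.0, bias=-5.0):
    """Return the estimated probability that sample x is real (logistic classifier)."""
    return 1.0 / (1.0 + math.exp(-(weight * x + bias)))


random.seed(0)
noise = [random.gauss(0.0, 1.0) for _ in range(3)]   # random input to the generator
fake_samples = [generator(z) for z in noise]          # generated (fake) data
scores = [discriminator(x) for x in fake_samples]     # probabilities between 0 and 1
```

In a real GAN both functions would be deep networks whose weights are learned; here the weights are fixed so that only the data flow (noise, then fake sample, then score) is visible.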

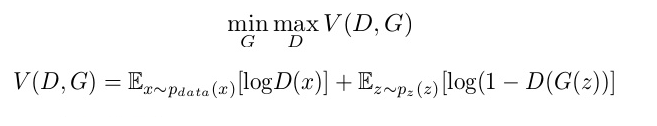

Here, the generative model captures the data distribution and is trained to produce new samples that maximise the probability of the discriminator making a mistake (that is, to maximise the discriminator's loss). The discriminator, on the other hand, is a model that estimates the probability that a sample it receives came from the training data rather than from the generator, and it tries to maximise its classification accuracy. As a result, the GAN is formulated as a minimax game over a value function V(D, G): the discriminator seeks to maximise V(D, G), while the generator seeks to minimise it.

Step 1: Define the Problem

Defining the problem is the first step, and a clear problem statement is essential to the project's success. Since GANs work on a distinct set of problems, you must specify what you want to generate, such as a song, a poem, text, or an image.

Step 2: Choose the GAN's Architecture

There are many varieties of GAN, several of which are covered later in this article. The kind of GAN architecture to use needs to be specified.

Step 3: Use a Real Dataset to Train the Discriminator

The discriminator is now trained on a real dataset. During this phase only the discriminator is updated: its loss is backpropagated through the discriminator alone, for n epochs, and no gradient reaches the generator. The real data you provide is noise-free and contains only real images, while instances produced by the generator serve as negative examples of fake images. The following takes place during discriminator training:

It classifies both real and fake data. The discriminator loss penalises the discriminator when it misclassifies a real instance as fake or a fake instance as real, which helps it improve. The discriminator's weights are updated through the discriminator loss.
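The discriminator update described above can be sketched as follows. This is a minimal illustration that assumes a one-dimensional logistic discriminator D(x) = sigmoid(w * x + c) and a binary cross-entropy loss; the function name, learning rate, and sample values are hypothetical.

```python
import math


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def discriminator_step(disc, real_batch, fake_batch, lr=0.05):
    """One discriminator update; returns new (w, c) and the loss before the update."""
    w, c = disc
    labelled = [(x, 1.0) for x in real_batch] + [(x, 0.0) for x in fake_batch]
    loss = grad_w = grad_c = 0.0
    for x, label in labelled:
        p = sigmoid(w * x + c)                # predicted probability of "real"
        # Cross-entropy penalty: large when p disagrees with the label.
        loss -= label * math.log(p + 1e-12) + (1.0 - label) * math.log(1.0 - p + 1e-12)
        grad_w += (p - label) * x             # gradient of the loss w.r.t. w
        grad_c += p - label                   # gradient of the loss w.r.t. c
    n = len(labelled)
    w -= lr * grad_w / n                      # only discriminator weights change
    c -= lr * grad_c / n
    return (w, c), loss / n


real = [4.0, 5.0, 3.5]      # stand-ins for real samples
fake = [0.0, -1.0, 0.5]     # stand-ins for generator output
disc, loss = discriminator_step((0.0, 0.0), real, fake)
```

Calling `discriminator_step` repeatedly on the same batches lowers the loss, which is the sense in which the penalty "helps it perform better".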

Step 4: Train Generator

Give the generator some random input (noise), and it will use that noise to produce fake outputs. The discriminator is idle while the generator is trained, and the generator is idle while the discriminator is trained. During training, the generator tries to convert the random noise it receives into meaningful data. Producing meaningful output takes time and many epochs. The steps to train the generator are:

1. Sample random noise.
2. Produce generator output from the noise sample.
3. Get the discriminator's classification of the generator output as real or fake.
4. Calculate the loss from the discriminator's classification.
5. Backpropagate through both the discriminator and the generator to obtain gradients.
6. Use the gradients to update only the generator's weights.
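The generator training steps can be sketched in a few lines of plain Python. The sketch assumes a one-dimensional generator G(z) = a * z + b, a fixed logistic discriminator D(x) = sigmoid(w * x + c), and the minimax generator loss log(1 - D(G(z))); the function name and hyperparameters are hypothetical, and the gradients are written out by hand instead of using an autodiff library.

```python
import math
import random


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def generator_step(gen, disc, batch_size=64, lr=0.05):
    """One generator update; the discriminator parameters stay fixed."""
    a, b = gen    # generator G(z) = a * z + b
    w, c = disc   # discriminator D(x) = sigmoid(w * x + c)
    loss = grad_a = grad_b = 0.0
    for _ in range(batch_size):
        z = random.gauss(0.0, 1.0)                # sample random noise
        x_fake = a * z + b                        # generator output
        d_fake = sigmoid(w * x_fake + c)          # discriminator's classification
        loss += math.log(1.0 - d_fake + 1e-12)    # loss log(1 - D(G(z)))
        dloss_dx = -d_fake * w                    # backpropagate through D ...
        grad_a += dloss_dx * z                    # ... and then through G
        grad_b += dloss_dx
    a -= lr * grad_a / batch_size                 # update generator weights only
    b -= lr * grad_b / batch_size
    return (a, b), loss / batch_size


random.seed(0)
gen, loss = generator_step(gen=(1.0, 0.0), disc=(1.0, 0.0))
```

Note that the discriminator's parameters are read but never written: the gradient passes through the discriminator only to reach the generator's weights.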

Step 5: Train a Discriminator on False Data

The samples that the generator creates are passed to the discriminator, which predicts whether each one is real or fake and provides feedback to the generator.

Step 6: Train Generator using the Discriminator's output

Once more, the generator is trained on the feedback from the discriminator in an effort to improve its performance.

This is an iterative process that continues until the generator succeeds in fooling the discriminator.

Loss Function of Generative Adversarial Networks (GANs)

By now, how a GAN operates should be clear. Let's examine the loss function it uses, and what is minimised and maximised during this iterative process. The discriminator seeks to maximise the value function V(D, G), while the generator seeks to minimise it. If you have ever come across a minimax game, it is the same idea. The terms of the function are defined below.
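The formula itself is not shown in the text. Assuming it is the standard minimax objective from the original GAN formulation (an assumption, though it matches the terms defined below), it can be written as:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x}\left[\log D(x)\right] + \mathbb{E}_{z}\left[\log\left(1 - D(G(z))\right)\right]
$$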

D(x) is the discriminator's estimate of the probability that real data instance x is real.

E_x is the expected value over all real data instances.

G(z) is the generator's output when given noise z.

D(G(z)) is the discriminator's estimate of the probability that a fake instance is real.

E_z is the expected value over all random inputs to the generator (in effect, the expected value over all generated fake instances G(z)).
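As a small worked example of these terms, the sketch below estimates the value function from batches of discriminator outputs, using the batch average in place of each expected value. It assumes the standard form E_x[log D(x)] + E_z[log(1 - D(G(z)))]; the function name and the score values are made up for illustration.

```python
import math


def value_function(real_scores, fake_scores):
    """Estimate V(D, G) from discriminator outputs on real and generated batches."""
    # Batch average standing in for E_x[log D(x)].
    e_x = sum(math.log(d) for d in real_scores) / len(real_scores)
    # Batch average standing in for E_z[log(1 - D(G(z)))].
    e_z = sum(math.log(1.0 - d) for d in fake_scores) / len(fake_scores)
    return e_x + e_z


confident_d = value_function([0.9, 0.9], [0.1, 0.1])   # D separates real from fake
fooled_d = value_function([0.5, 0.5], [0.5, 0.5])      # D cannot tell them apart
```

The first value is higher than the second, which reflects the two opposing goals: the discriminator pushes the value up by classifying well, and the generator pushes it down by making its samples harder to tell apart from real data.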

Obstacles that Generative Adversarial Networks (GANs) Face:

Stability between the discriminator and the generator is a problem. We prefer the discriminator to be lenient; we do not want it to be overly strict.

Determining the position of objects is a problem. Suppose a picture contains three horses: the generator may produce six eyes but only one horse.

Understanding perspective is a challenge. Current GANs typically work with two-dimensional images, so even when trained on images of three-dimensional scenes they may fail to produce images with a correct sense of depth.

Understanding global structure is a related problem. GANs struggle to grasp holistic structure, so they occasionally produce an image that is unrealistic or physically impossible.

Various Generative Adversarial Network (GAN) Types

1. DCGAN: DCGAN stands for Deep Convolutional GAN. It is among the most popular and effective varieties of GAN architecture. It is implemented with ConvNets instead of multi-layer perceptrons. The ConvNets use strided convolutions in place of max pooling, and the layers of the network are not fully connected.

2. Conditional GAN and Unconditional GAN (CGAN): A conditional GAN is a deep neural network that takes some additional parameters. Labels are also added to the discriminator's inputs to help it classify the input correctly and not be easily fooled by the generator.

3. Least Square GAN (LSGAN): This kind of GAN uses a least-squares loss function for the discriminator. Minimising the LSGAN objective function minimises the Pearson divergence.

4. Auxiliary Classifier GAN (ACGAN): This is similar to CGAN and is an advanced version of it. In addition to deciding whether an image is real or fake, the discriminator must also predict the source or class label of the input image.

5. Dual Video Discriminator GAN (DVD-GAN): Based on the BigGAN architecture, DVD-GAN is a generative adversarial network for producing videos. A spatial discriminator and a temporal discriminator are the two discriminators used by DVD-GAN.

6. SRGAN (Super-Resolution GAN): Its primary purpose, referred to as "domain transformation," is to convert low-resolution images into high-resolution ones.

7. CycleGAN: An image translation tool released in 2017. For example, after training it on a dataset of horse images, we can translate them into zebra images.

8. InfoGAN: An advanced form of GAN that can learn disentangled representations using an unsupervised learning approach.
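Taking the LSGAN entry in the list above as an example, the least-squares losses can be sketched as below. The sketch assumes the commonly used target labels of 1 for real data and 0 for generated data, with the generator aiming for a score of 1; the function names are hypothetical, and other label choices are possible.

```python
def lsgan_discriminator_loss(real_scores, fake_scores):
    """Least-squares loss: push D(x) toward 1 and D(G(z)) toward 0."""
    real_term = sum((d - 1.0) ** 2 for d in real_scores) / len(real_scores)
    fake_term = sum(d ** 2 for d in fake_scores) / len(fake_scores)
    return 0.5 * (real_term + fake_term)


def lsgan_generator_loss(fake_scores):
    """Least-squares loss: push D(G(z)) toward 1, the label used for real data."""
    return 0.5 * sum((d - 1.0) ** 2 for d in fake_scores) / len(fake_scores)


perfect_d = lsgan_discriminator_loss([1.0], [0.0])   # discriminator is always right
fooled_g = lsgan_generator_loss([1.0])               # generator fully fools D
```

Compared with the logarithmic loss, the squared error keeps penalising samples by their distance from the target score, including samples that are already classified correctly.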

Conclusion:

In the realm of machine learning, Generative Adversarial Networks (GANs) are a powerful paradigm with a wide range of uses. This article covered their definition, applications, components, training steps, loss function, challenges, and variants. GANs have proven to be very effective at producing realistic data, improving image processing, and enabling innovative applications. Even with their success, problems such as training instability and mode collapse remain and require continued research. With the right knowledge and application, however, GANs have the potential to transform a variety of fields.
